- Fast

- Isolated (compared to running multiple services on a single VM)

- Light-weight

- Define environment with code

docker run hello-world

Run a Linux container

docker run -it --rm centos

cat /etc/redhat-release

exit

Run a Python container

docker run -it --rm python:alpine

print('hello')

exit()

docker run <IMAGE:TAG> <COMMAND>

docker run -it --rm centos ls

Pull an image

docker pull alpine
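The `<IMAGE:TAG>` form above can be illustrated with a small sketch of how a reference such as `python:alpine` splits into a repository and a tag. This is a simplified model, not Docker's actual parser: `parseImageRef` is a hypothetical helper, digests (`image@sha256:...`) are ignored, and `localhost:5000/backend` is an invented example of a registry with a port.

```javascript
// Split a Docker image reference into repository and tag.
// Simplified sketch: digest references (image@sha256:...) are not handled.
function parseImageRef(ref) {
  const lastSlash = ref.lastIndexOf('/');
  const lastColon = ref.lastIndexOf(':');
  // A colon that appears before the last slash belongs to a registry port, not a tag.
  if (lastColon > lastSlash) {
    return { repository: ref.slice(0, lastColon), tag: ref.slice(lastColon + 1) };
  }
  // When no tag is given, Docker falls back to "latest".
  return { repository: ref, tag: 'latest' };
}

console.log(parseImageRef('python:alpine'));
console.log(parseImageRef('alpine'));
console.log(parseImageRef('localhost:5000/backend'));
```

This is why `docker pull alpine` and `docker pull alpine:latest` fetch the same image.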

Develop a simple API

mkdir backend

cd backend

npm init

npm install --save express

# Program

cat << EOF > index.js
const os = require('os');
const crypto = require('crypto');
const express = require('express');

const app = express();

app.get('/', function (req, res) {
  // CPU intensive operation
  var hash = 'start ' + Math.random();
  for (var i = 0; i < 25600; i++) {
    hash = crypto.createHmac('sha256', '000').update(hash).digest('hex');
  }
  res.send(os.hostname());
});

app.listen(3000, function () {
  console.log('Listening on port 3000!');
});

process.on('SIGTERM', function () {
  process.exit(0);
});

process.on('SIGINT', function () {
  process.exit(0);
});
EOF

Run server

nodejs index.js

Access from browser
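To get a feel for how much work each request does, the handler's loop can be run on its own. The sketch below extracts the same chained HMAC-SHA256 loop into a hypothetical `burnCpu` function and times it with Node's built-in `process.hrtime.bigint()`; the elapsed time depends on the machine, so no figure is claimed here.

```javascript
const crypto = require('crypto');

// Same chained HMAC loop as the request handler, extracted into a function.
function burnCpu(iterations) {
  let hash = 'start ' + Math.random();
  for (let i = 0; i < iterations; i++) {
    hash = crypto.createHmac('sha256', '000').update(hash).digest('hex');
  }
  return hash;
}

// Time one "request worth" of work (25600 iterations, as in index.js).
const started = process.hrtime.bigint();
const result = burnCpu(25600);
const elapsedMs = Number(process.hrtime.bigint() - started) / 1e6;

console.log(`final hash: ${result}`);
console.log(`elapsed: ${elapsedMs.toFixed(1)} ms`);
```

Because Node runs this loop on a single thread, concurrent requests queue behind each other, which makes the service a convenient target for the scaling exercises later on.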

Create a Dockerfile

cat << EOF > Dockerfile
FROM node:8-alpine
COPY package.json /app/package.json
COPY index.js /app/index.js
RUN cd /app && npm install
CMD ["node", "/app/index.js"]
EOF

Build an image

docker build -t <NAME>-backend .

Run

docker run -it --rm -p 80:3000 <NAME>-backend

Run in background

docker run -d -p 80:3000 <NAME>-backend

View running containers

docker ps

View all containers

docker ps -a

Attach to running container

docker attach <CONTAINER_ID_OR_NAME>

Stop container

docker stop <CONTAINER_ID_OR_NAME>

Delete container

docker rm <CONTAINER_ID_OR_NAME>

A container can have multiple processes, but when PID 1 exits, the container exits.

docker run -d --name alpine alpine top

docker exec -it alpine sh

ps

kill 1

If time permits

Push image to GCR

# Tag image

docker tag <NAME>-backend us.gcr.io/docker-k8s-workshop/<NAME>-backend:1

# Push

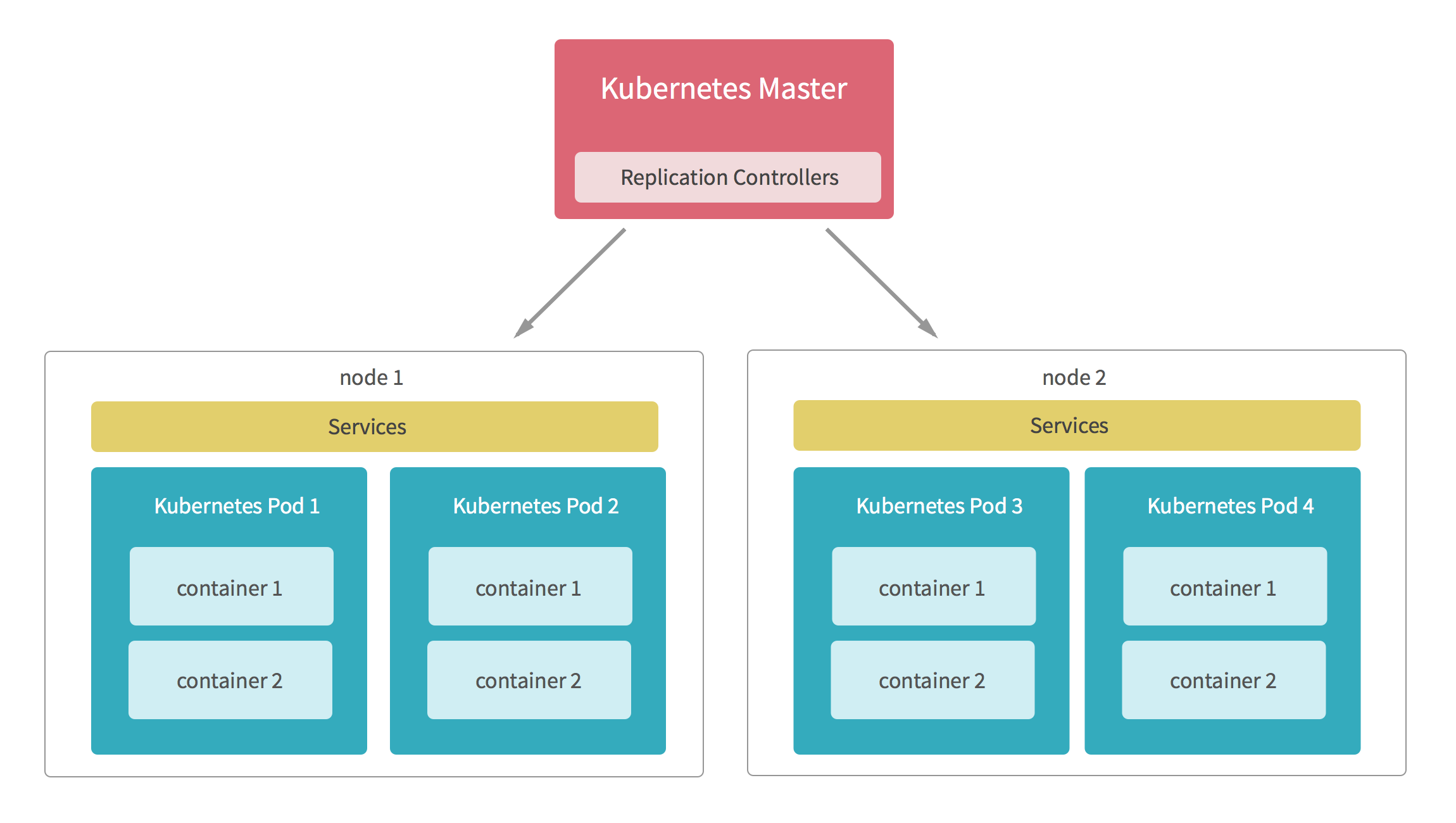

gcloud docker -- push us.gcr.io/docker-k8s-workshop/<NAME>-backend:1

- Distributed workload

- Resilient service, problem detection / recovery

- Rolling update / rollback

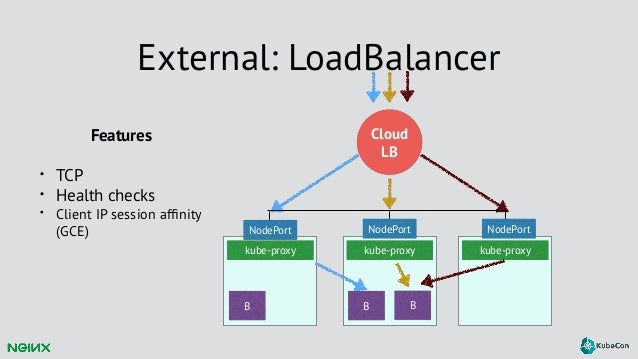

- Load balancing (Google Cloud Load Balancer)

- Service discovery (ClusterIP + DNS)

- Secret management

- Artifacts storage (GCR in this workshop)

- —

- Build + deploy pipeline

- HTTPS

- Monitoring

gcloud container clusters get-credentials workshop-cluster --zone us-east1-d --project docker-k8s-workshop

Show nodes

kubectl get nodes

---

apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: <NAME>-backend
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: <NAME>-backend
    spec:
      containers:
        - name: <NAME>-backend
          image: us.gcr.io/docker-k8s-workshop/<NAME>-backend:1
          ports:
            - containerPort: 3000

Deploy!

kubectl apply -f kube.yaml

Check deployment just created

kubectl get deployments

Check Pods

kubectl get pods

Each pod has its own IP

kubectl get pods -o wide

Resize deployment (distributed workload)

spec:
  replicas: 3

Delete one pod (automatic recovery)

kubectl delete pod <POD_NAME>
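The automatic recovery seen here comes from a control loop that keeps comparing the desired replica count with the Pods that actually exist. The sketch below is a deliberately simplified, hypothetical model of that decision (the function `reconcile` and the Pod names are invented for illustration); the real controller does considerably more.

```javascript
// Hypothetical, simplified model of a replica controller's decision:
// compare desired and observed Pod counts and report the correction needed.
function reconcile(desiredReplicas, runningPods) {
  const diff = desiredReplicas - runningPods.length;
  if (diff > 0) return { action: 'create', count: diff };
  if (diff < 0) return { action: 'delete', count: -diff };
  return { action: 'none', count: 0 };
}

// Three replicas desired, one Pod was just deleted by hand.
console.log(reconcile(3, ['backend-a', 'backend-b']));
// Scaling down from 3 to 1 replica.
console.log(reconcile(1, ['backend-a', 'backend-b', 'backend-c']));
```

Deleting a Pod only changes the observed state; since the desired state is still three replicas, a replacement is created.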

---

apiVersion: v1
kind: Service
metadata:
  name: <NAME>-backend
spec:
  type: NodePort
  selector:
    app: <NAME>-backend
  ports:
    - name: <NAME>-backend-port
      port: 3000
      protocol: TCP

Check services

kubectl get services
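The Service finds its Pods through the `selector`: any Pod whose labels contain every key/value pair in the selector receives traffic. A minimal sketch of that matching rule follows; `matchesSelector`, `selectPods`, and the Pod names and labels are hypothetical and only model equality-based selectors.

```javascript
// Returns true when every key/value pair in the selector is present in the labels.
function matchesSelector(selector, labels) {
  return Object.entries(selector).every(([key, value]) => labels[key] === value);
}

// Keep only the Pods that the selector matches.
function selectPods(selector, pods) {
  return pods.filter((pod) => matchesSelector(selector, pod.labels));
}

// Invented Pods for illustration; extra labels do not prevent a match.
const pods = [
  { name: 'alice-backend-1', labels: { app: 'alice-backend' } },
  { name: 'alice-backend-2', labels: { app: 'alice-backend', tier: 'api' } },
  { name: 'bob-backend-1', labels: { app: 'bob-backend' } },
];

console.log(selectPods({ app: 'alice-backend' }, pods).map((pod) => pod.name));
```

This is why the `app` label in the Deployment's Pod template must match the Service's selector exactly.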

---

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: <NAME>-backend
spec:
  backend:
    serviceName: <NAME>-backend
    servicePort: <NAME>-backend-port

Check Ingress

kubectl get ingress

Access from browser.

cat << EOF > index.js
const os = require('os');
const crypto = require('crypto');
const express = require('express');

const app = express();

app.get('/', function (req, res) {
  // CPU intensive operation
  var hash = 'start ' + Math.random();
  for (var i = 0; i < 25600; i++) {
    hash = crypto.createHmac('sha256', '000').update(hash).digest('hex');
  }
  res.send('[v2] ' + os.hostname());
});

app.listen(3000, function () {
  console.log('Listening on port 3000!');
});

process.on('SIGTERM', function () {
  process.exit(0);
});

process.on('SIGINT', function () {
  process.exit(0);
});
EOF

# Build

docker build -t <NAME>-backend .

# Tag

docker tag <NAME>-backend us.gcr.io/docker-k8s-workshop/<NAME>-backend:2

# Push

gcloud docker -- push us.gcr.io/docker-k8s-workshop/<NAME>-backend:2

View current Pods

kubectl get pods

Update service (first change the image tag in kube.yaml to :2)

kubectl apply -f kube.yaml

View Pods again

kubectl get pods
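Watching the Pods during the update shows old and new Pods coexisting for a while. The sketch below simulates one possible rollout order, assuming one extra Pod may be started at a time and no Pod may be missing. Those two limits are chosen for illustration and are not set in the workshop's manifest; `rollingUpdateSteps` is a hypothetical helper, not Kubernetes code.

```javascript
// Simulate a rolling update that surges by one Pod and never drops below
// the desired replica count (illustrative assumption, not the cluster default).
function rollingUpdateSteps(replicas) {
  let oldPods = replicas;
  let newPods = 0;
  const steps = [{ oldPods, newPods }];
  while (newPods < replicas) {
    newPods += 1; // start one new Pod first
    steps.push({ oldPods, newPods });
    oldPods -= 1; // then retire one old Pod
    steps.push({ oldPods, newPods });
  }
  return steps;
}

for (const step of rollingUpdateSteps(3)) {
  console.log(`old=${step.oldPods} new=${step.newPods}`);
}
```

At every step the total number of Pods stays at or above the desired count, so the service keeps answering during the update.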

kubectl rollout undo deployment <NAME>-backend

View result in browser

See "DNS for Services and Pods" in the Kubernetes documentation. Each Service gets a DNS name of the form:

my-svc.my-namespace.svc.cluster.local
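As a small illustration of the naming pattern above, the hypothetical helper below assembles a Service's DNS name. The defaults `default` (namespace) and `cluster.local` (cluster domain) are assumptions that hold for a typical cluster but can be configured differently.

```javascript
// Build the cluster-internal DNS name of a Service.
// The default namespace and cluster domain are assumptions about a typical cluster.
function serviceDnsName(service, namespace = 'default', clusterDomain = 'cluster.local') {
  return `${service}.${namespace}.svc.${clusterDomain}`;
}

console.log(serviceDnsName('my-svc', 'my-namespace'));
console.log(serviceDnsName('alice-backend'));
```

Other Pods in the cluster can use such a name as the hostname when calling the Service.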

Pod-level autoscaling: HorizontalPodAutoscaler scales the number of Pods based on observed CPU utilization.
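The scaling decision follows the formula documented for the HorizontalPodAutoscaler: desired replicas = ceil(current replicas × current utilization / target utilization), bounded by the configured minimum and maximum. The sketch below applies it with this workshop's values (50% target, 1 to 10 replicas); `desiredReplicas` is a hypothetical helper, and details such as the tolerance band and stabilization behaviour are left out.

```javascript
// Core HorizontalPodAutoscaler calculation, without tolerance or stabilization.
function desiredReplicas(current, currentCpuPercent, targetCpuPercent, min, max) {
  const raw = Math.ceil(current * (currentCpuPercent / targetCpuPercent));
  // Clamp the result to the configured bounds.
  return Math.min(max, Math.max(min, raw));
}

// 2 Pods at 100% CPU with a 50% target.
console.log(desiredReplicas(2, 100, 50, 1, 10));
// 4 Pods at 200% CPU would need 16 Pods, but maxReplicas caps the result.
console.log(desiredReplicas(4, 200, 50, 1, 10));
// 3 nearly idle Pods shrink towards minReplicas.
console.log(desiredReplicas(3, 10, 50, 1, 10));
```

Utilization is measured relative to each Pod's CPU request, so the autoscaler needs the Pods to declare one.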

---

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: <NAME>-backend
spec:
  scaleTargetRef:
    apiVersion: apps/v1beta1
    kind: Deployment
    name: <NAME>-backend
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 50

Node-level autoscaling: GKE supports node-level autoscaling when current node pools can’t satisfy all Pod resource requests.
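To see when a node pool stops being able to satisfy requests, it helps to count how many Pods with a given request fit on one node. The sketch below uses this workshop's request values (`100m` CPU, `200Mi` memory) and a hypothetical node with 1 CPU and 1Gi allocatable. The helper names are invented, only the `m`, `Ki`, `Mi` and `Gi` suffixes are parsed, and Pods already running on the node are ignored.

```javascript
// Parse a Kubernetes CPU quantity ("100m" or "2") into millicores.
function parseCpuMillicores(quantity) {
  return quantity.endsWith('m')
    ? parseInt(quantity.slice(0, -1), 10)
    : Math.round(parseFloat(quantity) * 1000);
}

// Parse a memory quantity with a binary suffix (Ki, Mi, Gi) into bytes.
function parseMemoryBytes(quantity) {
  const units = { Ki: 1024, Mi: 1024 ** 2, Gi: 1024 ** 3 };
  const suffix = quantity.slice(-2);
  if (units[suffix] !== undefined) {
    return parseFloat(quantity.slice(0, -2)) * units[suffix];
  }
  return parseFloat(quantity); // plain bytes
}

// How many Pods with the given requests fit into the allocatable resources.
function podsThatFit(request, allocatable) {
  const byCpu = Math.floor(parseCpuMillicores(allocatable.cpu) / parseCpuMillicores(request.cpu));
  const byMemory = Math.floor(parseMemoryBytes(allocatable.memory) / parseMemoryBytes(request.memory));
  return Math.min(byCpu, byMemory);
}

// Hypothetical node size; the request values are the ones used in this workshop.
console.log(podsThatFit({ cpu: '100m', memory: '200Mi' }, { cpu: '1', memory: '1Gi' }));
```

In this example memory, not CPU, is the limiting resource; once no node has room for another Pod, the Pod stays pending and the node autoscaler can add a node.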

spec:
  containers:
    - name: <NAME>-backend
      resources:
        requests:
          cpu: 100m
          memory: 200Mi

spec:
  containers:
    - name: <NAME>-backend
      livenessProbe:
        httpGet:
          path: /
          port: 3000
        initialDelaySeconds: 5
        periodSeconds: 10
        timeoutSeconds: 5
        successThreshold: 1
        failureThreshold: 3 # 30 seconds
      readinessProbe:
        httpGet:
          path: /
          port: 3000
        initialDelaySeconds: 5
        periodSeconds: 5
        timeoutSeconds: 2
        successThreshold: 1
        failureThreshold: 2 # 10 seconds

template:
  spec:
    affinity:
      podAntiAffinity:
        preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - <NAME>-backend
              topologyKey: kubernetes.io/hostname
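The anti-affinity rule above asks the scheduler to prefer nodes that do not already run a Pod with the same `app` label, so replicas spread across hosts. The sketch below models only that preference: `pickNode` and the node and Pod data are hypothetical, and the real scheduler combines this score with many other signals.

```javascript
// Simplified model of a "preferred" anti-affinity rule keyed on hostname:
// choose the node that currently runs the fewest Pods with the matching label.
function pickNode(nodes, appLabel) {
  let best = null;
  for (const node of nodes) {
    const matching = node.pods.filter((pod) => pod.labels.app === appLabel).length;
    if (best === null || matching < best.matching) {
      best = { name: node.name, matching };
    }
  }
  return best === null ? null : best.name;
}

// Invented cluster state for illustration.
const nodes = [
  { name: 'node-1', pods: [{ labels: { app: 'alice-backend' } }] },
  { name: 'node-2', pods: [{ labels: { app: 'bob-backend' } }] },
  { name: 'node-3', pods: [{ labels: { app: 'alice-backend' } }, { labels: { app: 'alice-backend' } }] },
];

console.log(pickNode(nodes, 'alice-backend'));
```

Because the rule is "preferred" rather than "required", Pods are still scheduled when every node already hosts a replica.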

Thanks a lot~