Gist: cooldil/0b2c5ee22befbbfcdefd06c9cf2b7a98

```python
import json
import os
import pickle
import sys
from datetime import datetime

import pandas as pd
import pytz
import requests
from influxdb import DataFrameClient

login_url = "https://monitoring.solaredge.com/solaredge-apigw/api/login"
panels_url = "https://monitoring.solaredge.com/solaredge-web/p/playbackData"

SOLAREDGE_USER = ""  # web username
SOLAREDGE_PASS = ""  # web password
SOLAREDGE_SITE_ID = ""  # site id
DAILY_DATA = "4"
WEEKLY_DATA = "5"

INFLUXDB_IP = ""
INFLUXDB_PORT = 8086
INFLUXDB_DATABASE = ""
INFLUXDB_SERIES = "optimisers"
INFLUXDB_RETENTION_POLICY = "autogen"

COOKIE_FILE = "solaredge.cookies"
FORM_HEADERS = {"Content-Type": "application/x-www-form-urlencoded"}

session = requests.Session()


def login():
    # Logging in sets the CSRF-TOKEN cookie that the data request needs
    session.post(login_url, headers=FORM_HEADERS,
                 data={"j_username": SOLAREDGE_USER, "j_password": SOLAREDGE_PASS})


def get_panels():
    # Request the per-component playback data for the site
    headers = {**FORM_HEADERS, "X-CSRF-TOKEN": session.cookies["CSRF-TOKEN"]}
    return session.post(panels_url, headers=headers,
                        data={"fieldId": SOLAREDGE_SITE_ID, "timeUnit": DAILY_DATA})


def save_cookies():
    with open(COOKIE_FILE, "wb") as f:
        pickle.dump(session.cookies, f)


if not os.path.exists(COOKIE_FILE):  # Create the cookie file on first run
    login()
    get_panels()
    save_cookies()

# Reuse the saved session cookies
with open(COOKIE_FILE, "rb") as f:
    session.cookies.update(pickle.load(f))

panels = get_panels()
if panels.status_code != 200:  # Saved session expired: log in again and retry once
    login()
    panels = get_panels()
    if panels.status_code != 200:
        sys.exit(1)
    save_cookies()

# The response is not strict JSON: normalise the quotes and quote the bare keys before parsing
response = panels.content.decode("utf-8").replace("'", '"').replace("Array", "").replace("key", '"key"').replace("value", '"value"')
response = response.replace("timeUnit", '"timeUnit"').replace("fieldData", '"fieldData"').replace("reportersData", '"reportersData"')
response = json.loads(response)

data = {}  # component ID -> {UTC timestamp: value}
for date_str in response["reportersData"].keys():
    date = datetime.strptime(date_str, "%a %b %d %H:%M:%S GMT %Y")
    # Treat the timestamp as site-local time (change Australia/Adelaide to your site's zone) and convert to UTC
    date = pytz.timezone("Australia/Adelaide").localize(date).astimezone(pytz.utc)
    for sid in response["reportersData"][date_str].keys():
        for entry in response["reportersData"][date_str][sid]:
            # Strip thousands separators before converting the reading to a number
            data.setdefault(entry["key"], {})[date] = float(entry["value"].replace(",", ""))

df = pd.DataFrame(data)

conn = DataFrameClient(INFLUXDB_IP, INFLUXDB_PORT, "", "", INFLUXDB_DATABASE)
conn.write_points(df, INFLUXDB_SERIES, retention_policy=INFLUXDB_RETENTION_POLICY)
```

Is this still usable, given the "I am not a robot" checkbox on the login page at https://monitoring.solaredge.com/?

I am having trouble with Python:

```
marc@gigabyte:~/dev/powerEdge$ python3 ./monitor.py
  File "./monitor.py", line 29
    return
    ^
SyntaxError: 'return' outside function
marc@gigabyte:~/dev/powerEdge$ python2 ./monitor.py
  File "./monitor.py", line 29
    return
SyntaxError: 'return' outside function
```
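For reference, Python only accepts `return` inside a function body, so a bare `return` at the top level of a script fails at compile time under both Python 2 and 3. A minimal sketch of one way around it, assuming the early-exit logic is moved into a hypothetical `main()` function that returns an exit status (`main` and `exit_with` are illustrative names, not part of the gist):

```python
import sys


def main(status_code: int) -> int:
    """Hypothetical entry point; `return` is legal here because it is inside a function."""
    if status_code != 200:
        # Early exit: report failure to the caller instead of using a module-level `return`
        return 1
    # ... parse the playback data and write it to InfluxDB here ...
    return 0


def exit_with(status_code: int) -> None:
    """At module level, end the script with sys.exit() rather than `return`."""
    sys.exit(main(status_code))
```

At the bottom of a script, the equivalent of the failing `return` would be `sys.exit(main(panels.status_code))`.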

> I am having trouble with Python:

Made some changes to the code in order to make it handle new setups a bit more gracefully :-)

Thanks for this update.

I am still unable to connect to the web user interface at monitor.solaredge.com:

```
marc@gigabyte:~/dev/powerEdge$ python3 monitor2.py
Traceback (most recent call last):
  File "monitor2.py", line 26, in <module>
    with open('solaredge.cookies', 'rb') as f:
FileNotFoundError: [Errno 2] No such file or directory: 'solaredge.cookies'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/urllib3/connection.py", line 159, in _new_conn
    conn = connection.create_connection(
  File "/usr/lib/python3/dist-packages/urllib3/util/connection.py", line 61, in create_connection
    for res in socket.getaddrinfo(host, port, family, socket.SOCK_STREAM):
  File "/usr/lib/python3.8/socket.py", line 918, in getaddrinfo
    for res in _socket.getaddrinfo(host, port, family, type, proto, flags):
socket.gaierror: [Errno -2] Name or service not known

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/urllib3/connectionpool.py", line 662, in urlopen
    self._prepare_proxy(conn)
  File "/usr/lib/python3/dist-packages/urllib3/connectionpool.py", line 950, in _prepare_proxy
    conn.connect()
  File "/usr/lib/python3/dist-packages/urllib3/connection.py", line 314, in connect
    conn = self._new_conn()
  File "/usr/lib/python3/dist-packages/urllib3/connection.py", line 171, in _new_conn
    raise NewConnectionError(
urllib3.exceptions.NewConnectionError: <urllib3.connection.VerifiedHTTPSConnection object at 0x7fec33dcaa00>: Failed to establish a new connection: [Errno -2] Name or service not known

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/requests/adapters.py", line 439, in send
    resp = conn.urlopen(
  File "/usr/lib/python3/dist-packages/urllib3/connectionpool.py", line 719, in urlopen
    retries = retries.increment(
  File "/usr/lib/python3/dist-packages/urllib3/util/retry.py", line 436, in increment
    raise MaxRetryError(_pool, url, error or ResponseError(cause))
urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='monitoring.solaredge.com', port=443): Max retries exceeded with url: /solaredge-apigw/api/login (Caused by ProxyError('Cannot connect to proxy.', NewConnectionError('<urllib3.connection.VerifiedHTTPSConnection object at 0x7fec33dcaa00>: Failed to establish a new connection: [Errno -2] Name or service not known')))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "monitor2.py", line 29, in <module>
    session.post(login_url, headers = {"Content-Type": "application/x-www-form-urlencoded"}, data={"j_username": SOLAREDGE_USER, "j_password": SOLAREDGE_PASS})
  File "/usr/lib/python3/dist-packages/requests/sessions.py", line 581, in post
    return self.request('POST', url, data=data, json=json, **kwargs)
  File "/usr/lib/python3/dist-packages/requests/sessions.py", line 533, in request
    resp = self.send(prep, **send_kwargs)
  File "/usr/lib/python3/dist-packages/requests/sessions.py", line 646, in send
    r = adapter.send(request, **kwargs)
  File "/usr/lib/python3/dist-packages/requests/adapters.py", line 510, in send
    raise ProxyError(e, request=request)
requests.exceptions.ProxyError: HTTPSConnectionPool(host='monitoring.solaredge.com', port=443): Max retries exceeded with url: /solaredge-apigw/api/login (Caused by ProxyError('Cannot connect to proxy.', NewConnectionError('<urllib3.connection.VerifiedHTTPSConnection object at 0x7fec33dcaa00>: Failed to establish a new connection: [Errno -2] Name or service not known')))
```
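The traceback ends in `ProxyError('Cannot connect to proxy.', ...)` with `Name or service not known`, which means the request never reached SolarEdge: `requests` picked up a proxy from the environment (for example `HTTPS_PROXY`) and could not resolve that proxy's host name. A minimal standard-library sketch for checking which proxy would apply to a URL, using `urllib.request.getproxies()` and `urllib.request.proxy_bypass()`, which read the same `*_proxy` / `no_proxy` environment variables that `requests` uses (the helper name `proxy_for` is made up):

```python
import urllib.request
from typing import Optional
from urllib.parse import urlsplit


def proxy_for(url: str) -> Optional[str]:
    """Return the proxy URL that environment settings would apply to `url`, or None."""
    parts = urlsplit(url)
    # Hosts listed in no_proxy / NO_PROXY bypass the proxy entirely
    if parts.hostname and urllib.request.proxy_bypass(parts.hostname):
        return None
    # getproxies() maps a URL scheme to a proxy URL, built from HTTP_PROXY, HTTPS_PROXY, etc.
    return urllib.request.getproxies().get(parts.scheme)


if __name__ == "__main__":
    print(proxy_for("https://monitoring.solaredge.com/solaredge-apigw/api/login"))
```

If this prints a proxy you did not expect, fix or unset that variable. Alternatively, setting `session.trust_env = False` on the `requests` session makes it ignore proxy settings from the environment.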

Good work, thanks!

I have two questions, though:

- Even though I have 30 optimizers, only 21 are read out and written into the database.

- As a result of the script I get panel IDs in the database. Any idea how to correlate them with the optimizer serial numbers?

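To get it to write into InfluxDB 2.0 I had to change the writing process:

```python
from influxdb_client import InfluxDBClient
from influxdb_client.client.write_api import SYNCHRONOUS

# INFLUXDB_TOKEN, INFLUXDB_ORG and INFLUXDB_BUCKET are new settings next to the existing ones;
# INFLUXDB_IP must be a full URL here, e.g. "http://localhost:8086"
client = InfluxDBClient(url=INFLUXDB_IP, token=INFLUXDB_TOKEN, org=INFLUXDB_ORG)
write_api = client.write_api(write_options=SYNCHRONOUS)
write_api.write(bucket=INFLUXDB_BUCKET, org=INFLUXDB_ORG, record=df, data_frame_measurement_name="optimizer")
```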

Thank you

wolferl

> As a result of the script I get panel IDs in the database. Any idea how to correlate them with the optimizer serial numbers?

It's a bit of a manual and cumbersome process :-) At least, the only way I could figure out how to do it was to use the browser's Dev Tools -> Network capture and look at the "Request URL" when querying the "Info" of each individual component (inverter/module).

- Navigate to https://monitoring.solaredge.com and log on

- Navigate to the (logical) layout of your system

- Press F12 (to bring up the Dev Tools in most browsers) and switch to the Network tab

- Right-click the "component" whose ID you want to determine and select Info

- In the captured data in the Dev Tools pane, look for the entry whose Request URL begins with "https://monitoring.solaredge.com/solaredge-web/p/systemData?reporterId=#########". The ######### value is the ID of the component as seen by the script. The Info page that opened on the monitoring site should list the serial number. You can use these two pieces of data to map one to the other.
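Reading the ID off each Request URL by eye works, but if you copy several URLs out of the Network tab, a small helper can pull out the `reporterId` query parameter for you. A minimal standard-library sketch; the function name and the example ID below are made up:

```python
from urllib.parse import parse_qs, urlsplit


def reporter_id(request_url: str) -> str:
    """Extract the reporterId query parameter from a systemData Request URL."""
    query = parse_qs(urlsplit(request_url).query)
    try:
        return query["reporterId"][0]
    except KeyError:
        raise ValueError(f"no reporterId in {request_url!r}") from None


if __name__ == "__main__":
    url = "https://monitoring.solaredge.com/solaredge-web/p/systemData?reporterId=123456789"
    print(reporter_id(url))  # 123456789
```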

I did the above for all 35 of my panels, the inverter itself and the three string objects, and then inserted the code below (one line per component) between lines 42 and 43.

```python
# current line 42
response = response.replace('timeUnit', '"timeUnit"').replace('fieldData', '"fieldData"').replace('reportersData', '"reportersData"')
# new line 43
response = response.replace('########1', 'Component #1 Friendly name')
# new line 44
response = response.replace('########2', 'Component #2 Friendly name')
# ... one additional line for each additional component ID to friendly name mapping
# current line 43
response = json.loads(response)
```
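A possible alternative to the string replacements above, offered as an assumption rather than what the script does: rename the keys of the `data` dictionary after the parsing loop and before `pd.DataFrame(data)` is built, so that only the component IDs are touched instead of every occurrence of the text in the raw response. `ID_TO_NAME`, its entries and `rename_components` are placeholders:

```python
from typing import Dict, Hashable

# Placeholder mapping: component (reporter) ID -> friendly name
ID_TO_NAME: Dict[str, str] = {
    "111111111": "Inverter",
    "222222222": "String 1",
    "333333333": "Module 1.1",
}


def rename_components(data: Dict[Hashable, dict], names: Dict[str, str]) -> Dict[Hashable, dict]:
    """Return a copy of `data` with component IDs replaced by friendly names.

    IDs without an entry in `names` are kept unchanged. Keys are compared as strings,
    so this works whether the parsed IDs are str or int.
    """
    return {names.get(str(key), key): series for key, series in data.items()}
```

In the script this would be `data = rename_components(data, ID_TO_NAME)` just before `df = pd.DataFrame(data)`; renaming afterwards with `df.rename(columns=ID_TO_NAME)` is another option if the column labels are strings.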

> Even though I have 30 optimizers, only 21 are read out and written into the database.

Hmm... not sure what is going on there. Are you seeing all 30 optimizers/modules when viewing the physical/logical layout on the SolarEdge site? I personally have 35 modules/optimizers on a single inverter, spread across three strings. The code returns 35 + 1 + 3 = 39 component values for me just fine...

Hi

Thank you for the fast response!

> Even though I have 30 optimizers, only 21 are read out and written into the database.

I must correct myself: I have one inverter, one string (I have only one) and 30 optimizers. On the portal I see all panels, and I have already copied the IDs and serials so I can match them in the future.

It seems kind of random. Today I sent the same query and got all the data (inverter, string and all panels). 10 minutes later I got only the inverter itself, and 5 minutes after that only a couple of optimizers... very odd.
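Since the number of components returned appears to vary between requests, one defensive option (an assumption, not something the script does) is to compare the parsed component IDs against what you expect before writing to InfluxDB, and skip or retry the run when some are missing. `EXPECTED_COMPONENTS` is hypothetical; for the setup described here it would be 1 inverter + 1 string + 30 optimizers = 32:

```python
from typing import Iterable, List

EXPECTED_COMPONENTS = 32  # hypothetical: 1 inverter + 1 string + 30 optimizers


def is_complete(data: dict, expected: int = EXPECTED_COMPONENTS) -> bool:
    """Return True if `data` (component ID -> {timestamp: value}) covers every component."""
    return len(data) >= expected


def missing_ids(data: dict, known_ids: Iterable[str]) -> List[str]:
    """List the known component IDs that are absent from this response."""
    present = {str(key) for key in data}
    return sorted(set(known_ids) - present)
```

In the script, a check such as `if not is_complete(data): sys.exit(1)` placed before the InfluxDB write would keep partial snapshots out of the database.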

Another question:

I compared the values from the script with the values in the portal and could not find any match. Now I'm a bit confused. What do we get here: energy or power values? And do you have a clue why the portal delivers different values than the script?

Thank you!

> Another question: I compared the values from the script with the values in the portal and could not find any match. Now I'm a bit confused. What do we get here: energy or power values? And do you have a clue why the portal delivers different values than the script?

The script returns power values (technically averaged over a 15-minute period, since that is the granularity SE provides via the monitoring site). Since the values are only updated/published every 15 minutes or so, there is no value in running the script more often than every 15 minutes.

Run the script over a 24-hour period and see whether the data stored in InfluxDB matches what is on the SE site...
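Because each stored value is a 15-minute average power, the energy for a period is the sum of those averages multiplied by the interval length (E = Σ P_i × 0.25 h). A minimal sketch, assuming the values are in watts (the helper name is made up), which may help when comparing a day of script data against the energy figures on the site:

```python
from typing import Iterable

INTERVAL_HOURS = 15 / 60  # each value averages one 15-minute slot


def energy_wh(avg_power_w: Iterable[float], interval_h: float = INTERVAL_HOURS) -> float:
    """Integrate 15-minute average power readings (W) into energy (Wh)."""
    return sum(p * interval_h for p in avg_power_w)


if __name__ == "__main__":
    # Two 15-minute slots at 400 W and 800 W -> 100 Wh + 200 Wh
    print(energy_wh([400.0, 800.0]))  # 300.0
```

For the DataFrame built by the script, the per-component total over the fetched period would be `df.sum() * 0.25` under the same assumption.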

Hi

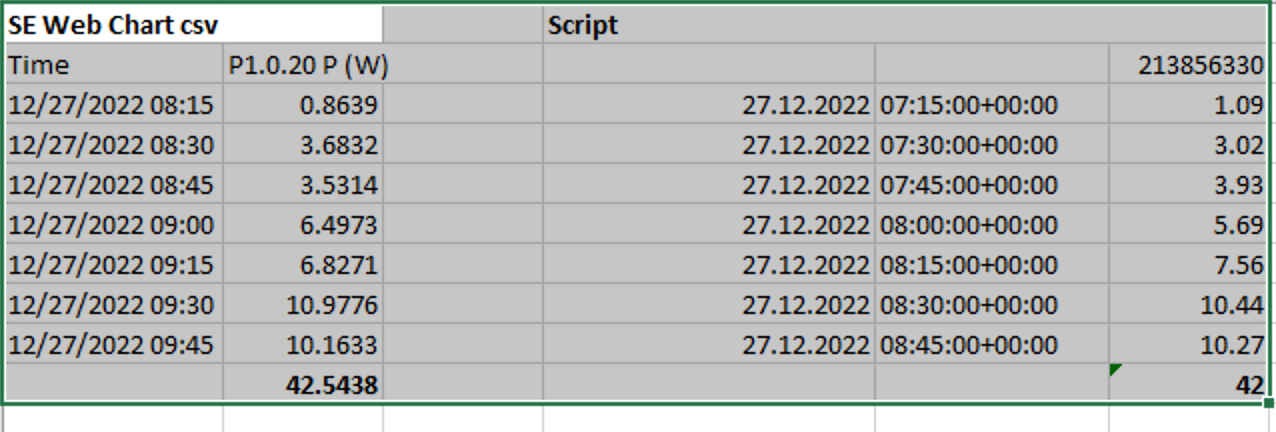

thank you for the advice. Tested the script results (before Influx) against the Solar Edge Web Portal (CSV export). It's quite similar, but not exactly the same. I assume it's some different roundings... See below:

I'm really not familiar with python, could you give me a hint where at the script you choose the data to be extracted and could the other data (Energy, Current, Voltage Panel, Voltage Optimizer) as well be extracted?

Merry christmay

Hi @cooldil, I'm wondering whether you happen to have a list of API parameters that I could use alongside those you programmed in this gist. Or could you confirm that these are the only ones?

Hi

I am wondering how to find this URL on the dashboard. Thanks!

`panels_url = "https://monitoring.solaredge.com/solaredge-web/p/playbackData"`

> Hi, I am wondering how to find this URL on the dashboard. Thanks! `panels_url = "https://monitoring.solaredge.com/solaredge-web/p/playbackData"`

The URL you referenced is called when you change over to the Layout area and then click on "Show playback". This brings up a "slider view" where you can see what the output of each of your panels was for any given 15 minute interval.
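For reference, the request the script sends to that playback URL is a form-encoded POST carrying the site ID as `fieldId` and the period as `timeUnit` (the script defines `DAILY_DATA = "4"` and `WEEKLY_DATA = "5"` and uses the daily value), plus an `X-CSRF-TOKEN` header copied from the `CSRF-TOKEN` cookie. A standard-library sketch of just the body encoding; `playback_body` is a made-up name and the site ID is a placeholder:

```python
from urllib.parse import urlencode

DAILY_DATA = "4"
WEEKLY_DATA = "5"


def playback_body(site_id: str, time_unit: str = DAILY_DATA) -> str:
    """Build the form-encoded body that the script POSTs to the playbackData URL."""
    return urlencode({"fieldId": site_id, "timeUnit": time_unit})


if __name__ == "__main__":
    print(playback_body("1234567"))  # fieldId=1234567&timeUnit=4
```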

Thanks for your reply. It works well for me!

Another question: it seems that we can only retrieve data for the current day, because "Show playback" can't display historical daily data. Am I right? And do you have any idea how to retrieve historical panel data? Thanks.

The output DataFrame's column names look like this:

`197469474 | 197469507 | 197469573 | 197469606`

I am wondering how to match them with the module numbers on the dashboard. Thanks again!

Excellent job! Thanks.