Currently, only data within the last 90 days is available via the OpenAQ API. However, there is much more data available on OpenAQ and a variety of different access mechanisms. Note also that there is work under way to bring back to the API a mechanism to access the data older than 90 days, details here.

If you're looking to query across all the data or even easily export the data (or a subset of it), the easiest way to do that currently is using a service like Amazon Athena. I'll provide some directions on how to do that below, but at a high level, this will let you make any query of the entire dataset that you'd like (written in SQL). I'll also provide some sample queries so you can see what's possible.

On to the directions!

-

You will need to create an AWS account if you don't currently have one, you can start this process at https://aws.amazon.com/free/. Note that while an AWS account does not cost any money, running a query using Athena will. At currernt OpenAQ volume sizes, querying the whole dataset costs ~$.01.

-

Log in to your AWS account and go to the Athena console at https://console.aws.amazon.com/athena/home?region=us-east-1. Please make sure you're in the US East (N. Virginia) region as this will make your queries faster and save OpenAQ money on data transfer. You can confirm this by looking at the region name in the top right of the console.

-

There is a lot more documentation on Athena here, but you should now be looking at the main console where you can enter any query. But first, you'll need to create a table on top of the OpenAQ data. To do this, you can copy and paste the table definition in the openaq.ddl file that's also in the gist. Copy and paste that and click Run Query. In the Results pane at the bottom of the window, you should see

Query Successful. -

That's it, now you're ready to make any query you'd like! I'll provide some examples below to get you started.

A simple query to just get all the data for a particular location (or city or country)

SELECT *

FROM openaq

WHERE location='US Diplomatic Post: Hanoi'The average of all pm25 measurements for Baruun 4 zam location in Mongolia

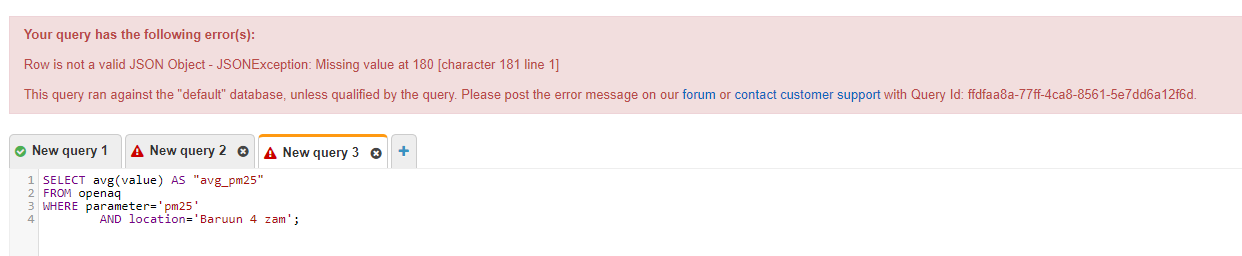

SELECT avg(value) AS "avg_pm25"

FROM openaq

WHERE parameter='pm25'

AND location='Baruun 4 zam';The number of measurements over 35 µg/m³ in India

SELECT count(*) AS "num_over"

FROM openaq

WHERE parameter='pm25'

AND value > 35

AND country = 'IN'Top 10 highest pm25 measurements sorted by highest and then date

SELECT *

FROM openaq

WHERE parameter='pm25'

ORDER BY value desc, date.utc DESC limit 10Athena also has geospatial support which means you can do things like ask for all the measurements around a certain latitude and longitude

SELECT *

FROM openaq

WHERE ST_WITHIN(ST_POINT(coordinates.longitude, coordinates.latitude), ST_BUFFER(ST_POINT(4.773,52.428), 0.1));-

The great thing about this mechanism is that you don't need to duplicate any data, you simply query using Athena and it runs on the latest data that OpenAQ maintains.

-

Athena has the full power of SQL behind it, so you have a ton of power to write any query you want, you're not limited by an API that OpenAQ creates. More details on SQL in general here.

-

If you're looking to use the data from these queries outside of visual inspection in Athena, you can click the 'Download CSV' button on the top of the Results pane. The CSV results will also be stored in an Amazon S3 bucket on your account which you can programatically access if you'd like.

-

Note that the underlying data you pointed to may change its structure as we figure out the most performant way to make the data avaible. We'll announce any changes and if that does happen, you'd just need to copy/paste another

openaq.ddltype file that'd update your table.