Last active

August 29, 2023 12:19


Demonstration of how to register non-Google Kubernetes clusters with Anthos Connect Hub


| #!/usr/bin/env bash | |

| export PROJECT_ID=$(gcloud config get-value project) | |

| export PROJECT_USER=$(gcloud config get-value core/account) # set current user | |

| export PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)") | |

| export IDNS=${PROJECT_ID}.svc.id.goog # workload identity pool | |

| export GCP_REGION="us-central1" # CHANGEME (OPT) | |

| export GCP_ZONE="us-central1-a" # CHANGEME (OPT) | |

| export NETWORK_NAME="default" | |

| # enable apis | |

| gcloud services enable compute.googleapis.com \ | |

| container.googleapis.com \ | |

| gkeconnect.googleapis.com \ | |

| gkehub.googleapis.com \ | |

| cloudresourcemanager.googleapis.com \ | |

| iam.googleapis.com | |

| # configure gcloud sdk | |

| gcloud config set compute/region $GCP_REGION | |

| gcloud config set compute/zone $GCP_ZONE | |

| # create GKE cluster (using Standard) [1 node per zone: 3 total] | |

| export CLUSTER_NAME="central1" | |

| gcloud beta container --project $PROJECT_ID clusters create $CLUSTER_NAME \ | |

| --region $GCP_REGION \ | |

| --release-channel "regular" \ | |

| --workload-pool=$IDNS \ | |

| --num-nodes 1 | |

| ########################################### | |

| # NAT GATEWAY (optional if you do not have external IP for VM) | |

| ########################################### | |

| export NAT_GW_IP="nat-gw-ip" | |

| export CLOUD_ROUTER_NAME="router-1" | |

| export CLOUD_ROUTER_ASN="64523" | |

| export NAT_GW_NAME="nat-gateway-1" | |

| # create IP address | |

| gcloud compute addresses create $NAT_GW_IP --region $GCP_REGION | |

| # create cloud router and nat gateway | |

| gcloud compute routers create $CLOUD_ROUTER_NAME \ | |

| --network $NETWORK_NAME \ | |

| --asn $CLOUD_ROUTER_ASN \ | |

| --region $GCP_REGION | |

| gcloud compute routers nats create $NAT_GW_NAME \ | |

| --router=$CLOUD_ROUTER_NAME \ | |

| --region=$GCP_REGION \ | |

| --auto-allocate-nat-external-ips \ | |

| --nat-all-subnet-ip-ranges \ | |

| --enable-logging | |

| # change to static IP (test) | |

| gcloud compute routers nats update $NAT_GW_NAME \ | |

| --router=$CLOUD_ROUTER_NAME \ | |

| --nat-external-ip-pool=$NAT_GW_IP | |

| ########################################### | |

| # K3S server (single compute engine instance) | |

| # can use --no-address if kubectl run in bastion within private network | |

| ########################################### | |

| # create VM for installing k3s | |

| export K3S_INSTANCE="k3s-cluster-1" | |

| gcloud beta compute --project=$PROJECT_ID instances create $K3S_INSTANCE \ | |

| --zone=$GCP_ZONE \ | |

| --machine-type=e2-medium \ | |

| --subnet=$NETWORK_NAME \ | |

| --maintenance-policy=MIGRATE \ | |

| --service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com \ | |

| --scopes=https://www.googleapis.com/auth/devstorage.read_only,https://www.googleapis.com/auth/logging.write,https://www.googleapis.com/auth/monitoring.write,https://www.googleapis.com/auth/servicecontrol,https://www.googleapis.com/auth/service.management.readonly,https://www.googleapis.com/auth/trace.append \ | |

| --tags=k8s,k3s \ | |

| --image=ubuntu-2004-focal-v20210908 \ | |

| --image-project=ubuntu-os-cloud \ | |

| --boot-disk-size=10GB \ | |

| --boot-disk-type=pd-balanced \ | |

| --boot-disk-device-name=$K3S_INSTANCE \ | |

| --no-shielded-secure-boot \ | |

| --shielded-vtpm \ | |

| --shielded-integrity-monitoring \ | |

| --labels=role=k8s \ | |

| --reservation-affinity=any | |

| # add firewall rule to allow access to port 6443 for apiserver | |

| gcloud compute --project=$PROJECT_ID firewall-rules create k3s-allow-apiserver \ | |

| --direction=INGRESS \ | |

| --priority=1000 \ | |

| --network=$NETWORK_NAME \ | |

| --action=ALLOW \ | |

| --rules=tcp:6443 \ | |

| --source-ranges=0.0.0.0/0 \ | |

| --target-tags=k3s | |

| # instead of startup script, just SSH into instance and install k3s | |

| # ref: https://headworq.eu/en/how-to-install-k3s-kubernetes-on-ubuntu/ | |

| # | |

| gcloud compute ssh $K3S_INSTANCE --zone $GCP_ZONE | |

| # sudo apt update -y | |

| # curl -sfL https://get.k3s.io | sh - | |

| # check if k3s is running | |

| # sudo kubectl get nodes | |

| # NAME STATUS ROLES AGE VERSION | |

| # k3s-cluster-1 Ready control-plane,master 6m30s v1.21.4+k3s1 | |

| ############################################## | |

| # Register GKE cluster(s) | |

| # REF: https://cloud.google.com/anthos/multicluster-management/connect/registering-a-cluster | |

| # REF: https://cloud.google.com/anthos/multicluster-management/connect/prerequisites | |

| # REF: https://cloud.google.com/anthos/docs/setup/attached-clusters | |

| ############################################## | |

| # verify you are admin of cluster | |

| kubectl auth can-i '*' '*' --all-namespaces | |

| # yes | |

| # verify workload identity enabled (GKE clusters) | |

| gcloud container clusters describe $CLUSTER_NAME --region $GCP_REGION --format="value(workloadIdentityConfig.workloadPool)" | |

| # grant user permissions to register cluster | |

| for ROLE in roles/gkehub.admin roles/iam.serviceAccountAdmin \ | |

| roles/iam.serviceAccountKeyAdmin roles/resourcemanager.projectIamAdmin; do | |

| # add-iam-policy-binding takes a single --role, so bind one role per call | |

| gcloud projects add-iam-policy-binding $PROJECT_ID \ | |

| --member user:$PROJECT_USER \ | |

| --role=$ROLE | |

| done | |

| # register the GKE cluster | |

| export MEMBERSHIP_NAME_GKE="gke-$CLUSTER_NAME" # diff name for each | |

| gcloud container hub memberships register $MEMBERSHIP_NAME_GKE \ | |

| --gke-cluster=$GCP_REGION/$CLUSTER_NAME \ | |

| --enable-workload-identity | |

| ############################################## | |

| # Register K3S cluster (each one) | |

| # REF: https://cloud.google.com/anthos/multicluster-management/connect/registering-a-cluster | |

| # REF: https://cloud.google.com/anthos/multicluster-management/connect/prerequisites | |

| # REF: https://cloud.google.com/anthos/docs/setup/attached-clusters | |

| ############################################## | |

| # service account | |

| export SA_NAME="miketest-k3s" | |

| export SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com" | |

| gcloud iam service-accounts create $SA_NAME \ | |

| --display-name "${SA_NAME}" | |

| # grant SA permissions to register cluster | |

| export MEMBERSHIP_NAME_K3S="k3s-cluster-1" # diff name for each | |

| gcloud projects add-iam-policy-binding $PROJECT_ID \ | |

| --member serviceAccount:$SA_EMAIL \ | |

| --role=roles/gkehub.connect \ | |

| --condition "expression=resource.name == \ | |

| 'projects/${PROJECT_ID}/locations/global/memberships/${MEMBERSHIP_NAME_K3S}',\ | |

| title=bind-${SA_NAME}-to-${MEMBERSHIP_NAME_K3S}" | |

| # download service account key | |

| export KEY_FILE="client.json" | |

| gcloud iam service-accounts keys create $KEY_FILE \ | |

| --iam-account=$SA_EMAIL | |

| # **** NOTE ON CLUSTER IN SHELL: on the k3s VM, open the config file **** | |

| # sudo kubectl config view --flatten > k3s.config | |

| # vi k3s.config and change 127.0.0.1:6443 server to IP discoverable within network | |

| # copy contents and save as local file k3s-config.yaml | |

| # register the k3s cluster | |

| export K3S_CONTEXT="k3s" | |

| export K3S_KUBECONFIG="k3s-config.yaml" | |

| export KUBECONFIG=~/.kube/config:$K3S_KUBECONFIG # add to local context | |

| gcloud container hub memberships register $MEMBERSHIP_NAME_K3S \ | |

| --context=$K3S_CONTEXT \ | |

| --kubeconfig=$K3S_KUBECONFIG \ | |

| --service-account-key-file=$KEY_FILE | |

| # authenticate (login) to the remote cluster | |

| # REF: https://cloud.google.com/anthos/multicluster-management/console/logging-in | |

| export KSA_NAME="k3s-anthos-agent" | |

| export VIEW_BINDING_NAME="anthos-agent-view" | |

| export ADMIN_BINDING_NAME="anthos-agent-admin" # optional | |

| export CLOUD_CONSOLE_READER_BINDING_NAME="anthos-agent-reader" | |

| kubectl --context $K3S_CONTEXT create serviceaccount $KSA_NAME | |

| kubectl --context $K3S_CONTEXT create clusterrolebinding $VIEW_BINDING_NAME \ | |

| --clusterrole view --serviceaccount default:$KSA_NAME | |

| kubectl --context $K3S_CONTEXT create clusterrolebinding $ADMIN_BINDING_NAME \ | |

| --clusterrole cluster-admin --serviceaccount default:$KSA_NAME | |

| kubectl --context $K3S_CONTEXT create clusterrolebinding $CLOUD_CONSOLE_READER_BINDING_NAME \ | |

| --clusterrole cloud-console-reader --serviceaccount default:${KSA_NAME} | |

| # fetch the KSA token to use for console login for cluster | |

| SECRET_NAME=$(kubectl --context $K3S_CONTEXT get serviceaccount $KSA_NAME -o jsonpath='{$.secrets[0].name}') | |

| kubectl --context $K3S_CONTEXT get secret ${SECRET_NAME} -o jsonpath='{$.data.token}' | base64 --decode | |

| # copy the token and paste in gcp console form field | |

| # CONGRATULATIONS!!! |
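
The manual kubeconfig step in the script (changing the `127.0.0.1:6443` server to an IP reachable on the network) could also be scripted. The following is a minimal sketch, assuming the file copied from the VM uses the stock k3s server URL. The function name `rewrite_k3s_kubeconfig` and its arguments are hypothetical and not part of the original script.

```shell
#!/usr/bin/env bash
# Sketch only: rewrite a k3s kubeconfig so it can be used from outside the VM.
# Assumptions (not from the original script): the function name, the argument
# order, and that the file contains the server URL https://127.0.0.1:6443.

rewrite_k3s_kubeconfig() {
  local src="$1"        # kubeconfig copied from the k3s VM (e.g. k3s.config)
  local dest="$2"       # output file (e.g. k3s-config.yaml)
  local server_ip="$3"  # IP reachable from where gcloud/kubectl run

  # Replace only the loopback API server address; everything else is copied as-is.
  sed "s#https://127\.0\.0\.1:6443#https://${server_ip}:6443#" "$src" > "$dest"
}
```

For example, `rewrite_k3s_kubeconfig k3s.config k3s-config.yaml <VM_IP>` would produce the file that `K3S_KUBECONFIG` points to. If the context inside that file is not already named `k3s` (a stock k3s kubeconfig usually names it `default`), running `kubectl config rename-context default k3s --kubeconfig k3s-config.yaml` should align it with `K3S_CONTEXT`.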


Anthos Connect Hub

This demo illustrates how you can register non-GKE clusters and view/manage them from within Google Cloud Console using Anthos Connect Hub. To illustrate, this single project (fleet, formerly environ) includes a GKE cluster called `central1` and a VM with a single-node k3s cluster installed on it, both accessible from Cloud Console.

Results

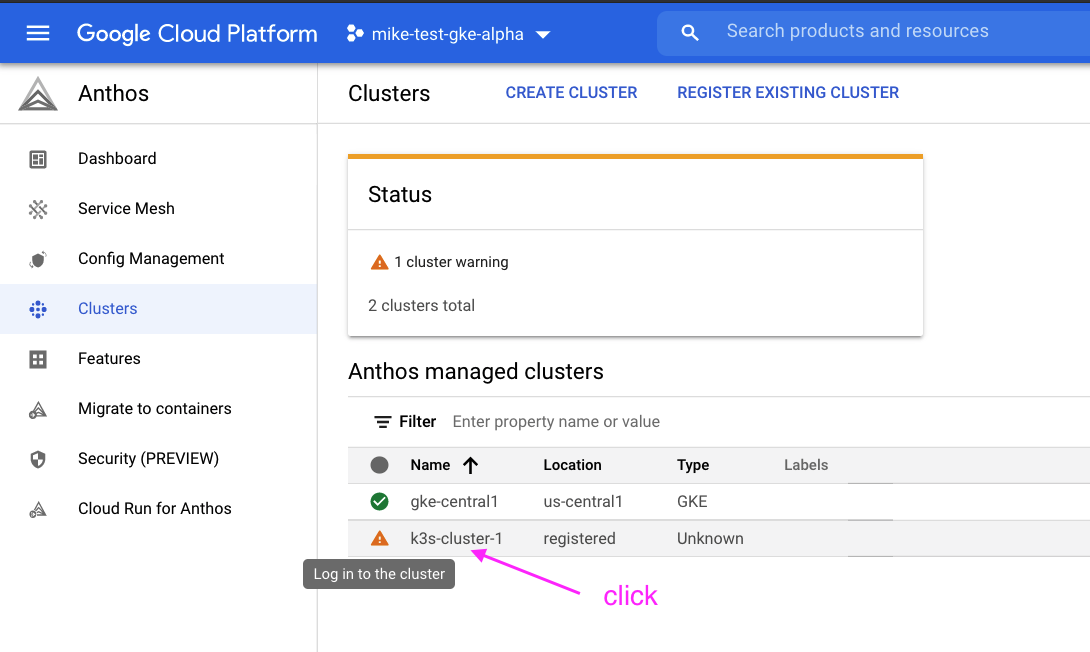

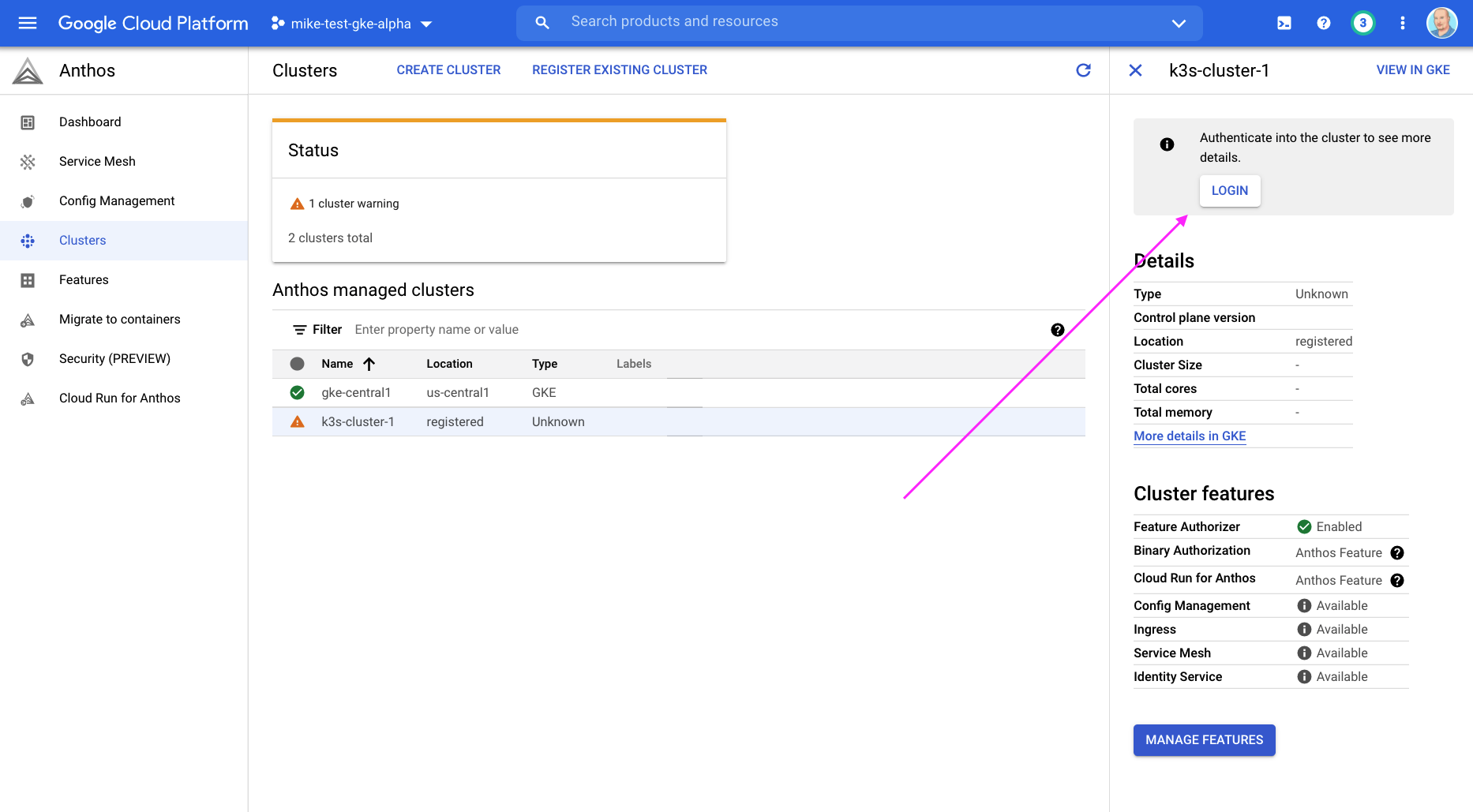

Logging into remote cluster

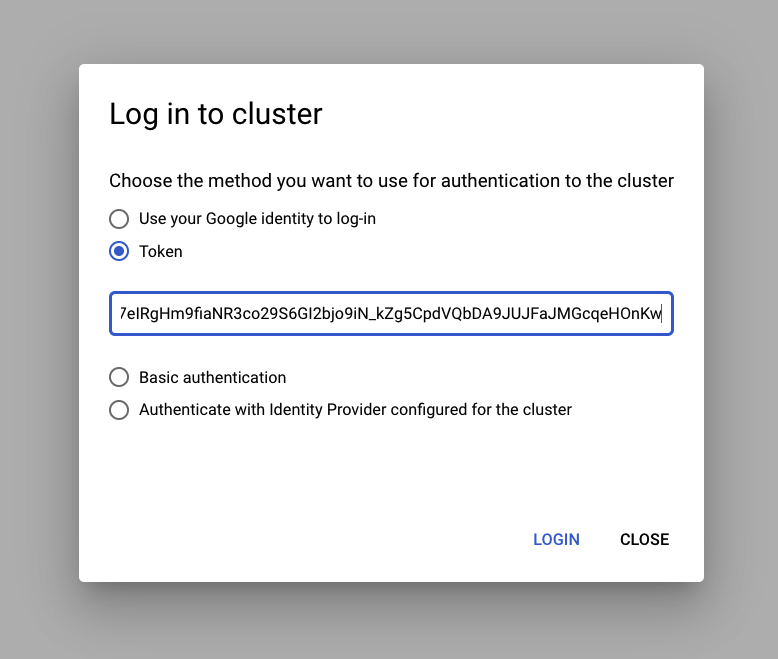

After following the steps above (note that some are expected to be run on the remote cluster in an SSH session) and registering the external clusters, you need to authenticate GCP to each cluster. After you print the auth token for the service account you created, paste it into the console as follows:

1. Click on the cluster name to reveal the side panel on the right
2. Click on login after copying the token generated in the terminal
3. Choose "token", paste in the token, and save
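
The token used in the login steps is read from the service account's Secret. On Kubernetes 1.24 and later that Secret is generally no longer created automatically, so a fallback may be needed on newer clusters. The following is a hedged sketch: the function name `print_ksa_token` and the `24h` default are assumptions, the service account is assumed to live in the `default` namespace as in the script, and `kubectl create token` requires kubectl 1.24 or newer.

```shell
#!/usr/bin/env bash
# Sketch only: print a login token for a Kubernetes service account.
# Assumptions (not from the original script): the function name and the
# 24h default duration are illustrative.

print_ksa_token() {
  local context="$1"          # kubectl context of the registered cluster
  local ksa="$2"              # Kubernetes service account name
  local duration="${3:-24h}"  # requested lifetime for the fallback token
  local secret

  # Older clusters (such as the v1.21 one shown in the script) attach a
  # token Secret to the service account automatically.
  secret=$(kubectl --context "$context" get serviceaccount "$ksa" \
    -o jsonpath='{.secrets[0].name}' 2>/dev/null || true)

  if [ -n "$secret" ]; then
    kubectl --context "$context" get secret "$secret" \
      -o jsonpath='{.data.token}' | base64 --decode
  else
    # Newer clusters: request a time-limited token instead.
    kubectl --context "$context" create token "$ksa" --duration "$duration"
  fi
}
```

A call such as `print_ksa_token "$K3S_CONTEXT" "$KSA_NAME"` would print the token to paste into the console. Tokens issued by `kubectl create token` expire, and the cluster may cap the requested duration, so the console login would need to be repeated once the token lapses.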

Repeat as necessary

Change the `MEMBERSHIP_NAME` value (`MEMBERSHIP_NAME_K3S` in the script) for each cluster, since this is how it displays in the list of registered clusters, then follow the same commands and login steps for each.

Manage / monitor your clusters from GKE console

After your clusters are registered, they will all appear in the GKE console and you can interact with them.
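
Registration can also be checked from the command line before opening the console. The following is a small sketch using the same `gcloud container hub` command group as the script (newer gcloud releases expose these commands under `gcloud container fleet`). The function name `membership_registered` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: succeed if a membership with the given name is registered.
# Assumption (not from the original script): the function name is illustrative.

membership_registered() {
  local name="$1"  # membership name, e.g. the value of MEMBERSHIP_NAME_K3S

  # The name field may be a full resource path, so keep only the last
  # path segment before comparing it to the requested name.
  gcloud container hub memberships list --format="value(name)" \
    | sed 's#.*/##' \
    | grep -Fqx -- "$name"
}
```

For example, `membership_registered "$MEMBERSHIP_NAME_K3S" && echo "registered"` would print `registered` only when that membership appears in the list.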