This document describes a new addressing scheme for Monero.

Chapters 1-2 are intended for a general audience.

Chapters 3-7 contain technical specifications.

- 1. Introduction

- 2. Features

- 3. Notation

- 4. Wallets

- 5. Addresses

- 6. Address encoding

- 7. Test vectors

- References

- Appendix A: Checksum

Sometime in 2024, Monero plans to adopt a new transaction protocol called Seraphis [1], which enables much larger ring sizes than the current RingCT protocol. However, due to a different key image construction, Seraphis is not compatible with CryptoNote addresses. This means that each user will need to generate a new set of addresses from their existing private keys. This provides a unique opportunity to vastly improve the addressing scheme used by Monero.

The CryptoNote-based addressing scheme [2] currently used by Monero has several issues:

- Addresses are not suitable as human-readable identifiers because they are long and case-sensitive.

- Too much information about the wallet is leaked when scanning is delegated to a third party.

- Generating subaddresses requires view access to the wallet. This is why many merchants prefer integrated addresses [3].

- View-only wallets need key images to be imported to detect spent outputs [4].

- Subaddresses that belong to the same wallet can be linked via the Janus attack [5].

- The detection of outputs received to subaddresses is based on a lookup table, which can sometimes cause the wallet to miss outputs [6].

Jamtis is a new addressing scheme that was developed specifically for Seraphis and tackles all of the shortcomings of CryptoNote addresses that were mentioned above. Additionally, Jamtis incorporates two other changes related to addresses to take advantage of this large upgrade opportunity:

- A new 16-word mnemonic scheme called Polyseed [7] that will replace the legacy 25-word seed for new wallets.

- The removal of integrated addresses and payment IDs [8].

Jamtis addresses, when encoded as a string, start with the prefix xmra and consist of 196 characters. Example of an address: xmra1mj0b1977bw3ympyh2yxd7hjymrw8crc9kin0dkm8d3wdu8jdhf3fkdpmgxfkbywbb9mdwkhkya4jtfn0d5h7s49bfyji1936w19tyf3906ypj09n64runqjrxwp6k2s3phxwm6wrb5c0b6c1ntrg2muge0cwdgnnr7u7bgknya9arksrj0re7whkckh51ik

There is no "main address" anymore - all Jamtis addresses are equivalent to a subaddress.

Jamtis introduces a short recipient identifier (RID) that can be calculated for every address. The RID consists of 25 alphanumeric characters that are separated by underscores for better readability. The RID for the above address is regne_hwbna_u21gh_b54n0_8x36q. Instead of comparing long addresses, users can compare the much shorter RID. RIDs are also suitable to be communicated via phone calls, text messages or handwriting to confirm a recipient's address. This allows the address itself to be transferred via an insecure channel.

Jamtis introduces new wallet tiers below the view-only wallet. One of the new wallet tiers, called "FindReceived", is intended for wallet scanning and only has the ability to calculate view tags [9]. It cannot generate wallet addresses or decode output amounts.

View tags can be used to eliminate 99.6% of outputs that don't belong to the wallet. If provided with a list of wallet addresses, this tier can also link outputs to those addresses. Possible use cases are:

- A wallet can have a "FindReceived" component that stays connected to the network at all times and filters out outputs in the blockchain. The full wallet can thus be synchronized at least 256x faster when it comes online (it only needs to check outputs with a matching view tag).
- If the "FindReceived" private key is provided to a third party, it can preprocess the blockchain and provide a list of potential outputs. This reduces the amount of data that a light wallet has to download by a factor of at least 256. The third party will not learn which outputs actually belong to the wallet and will not see output amounts.

Jamtis introduces new wallet tiers that are useful for merchants.

The Address generator tier is intended for merchant point-of-sale terminals. It can generate addresses on demand, but otherwise has no access to the wallet (i.e. it cannot recognize any payments in the blockchain).

The second merchant tier combines the Address generator tier with the ability to also view received payments (including amounts). It is intended for validating paid orders. It cannot see outgoing payments or received change.

Jamtis supports full view-only wallets that can identify spent outputs (unlike legacy view-only wallets), so they can display the correct wallet balance and list all incoming and outgoing transactions.

The Janus attack is a targeted attack that aims to determine whether two addresses A and B belong to the same wallet. Janus outputs are crafted in such a way that they appear to the recipient as being received to the wallet address B, while secretly using a key from address A. If the recipient confirms the receipt of the payment, the sender learns that the recipient owns both addresses A and B.

Jamtis prevents this attack by allowing the recipient to recognize a Janus output.

Jamtis addresses and outputs contain an encrypted address tag which enables a more robust output detection mechanism that does not need a lookup table and can reliably detect outputs sent to arbitrary wallet addresses.

- The function BytesToInt256(x) deserializes a 256-bit little-endian integer from a 32-byte input.
- The function Int256ToBytes(x) serializes a 256-bit integer to a 32-byte little-endian output.
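In Python, both helpers reduce to the built-in integer conversions. A minimal sketch; the snake_case names are illustrative stand-ins for BytesToInt256 and Int256ToBytes, not part of the specification:

```python
def bytes_to_int256(x: bytes) -> int:
    """BytesToInt256: read a 32-byte input as a little-endian unsigned integer."""
    if len(x) != 32:
        raise ValueError("BytesToInt256 expects exactly 32 bytes")
    return int.from_bytes(x, "little")


def int256_to_bytes(n: int) -> bytes:
    """Int256ToBytes: write a 256-bit unsigned integer as 32 little-endian bytes."""
    # int.to_bytes raises OverflowError for negative values or values >= 2**256
    return n.to_bytes(32, "little")


# The integer 9 serializes to the encoding of the Curve25519 generator B.
print(int256_to_bytes(9).hex())
```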

The function Hb(k, x), with parameters b and k, refers to the Blake2b hash function [10] initialized as follows:

- The output length is set to b bytes.
- Hashing is done in sequential mode.
- The personalization string is set to the ASCII value "Monero", padded with zero bytes.
- If the key k is not null, the hash function is initialized using the key k (maximum 64 bytes).
- The input x is hashed.

The function SecretDerive is defined as:

SecretDerive(k, x) = H32(k, x)
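Python's hashlib exposes all of the Blake2b parameters listed above, so Hb and SecretDerive can be sketched directly. This assumes inputs are byte strings and that a null key means unkeyed hashing; the function names are illustrative.

```python
import hashlib
from typing import Optional


def H(b: int, k: Optional[bytes], x: bytes) -> bytes:
    """Hb(k, x): Blake2b with a b-byte output and the "Monero" personalization."""
    return hashlib.blake2b(
        x,
        digest_size=b,     # output length of b bytes (1..64)
        key=k or b"",      # empty key = unkeyed; hashlib rejects keys over 64 bytes
        person=b"Monero",  # shorter than 16 bytes, so the remainder is zero bytes
    ).digest()             # hashlib's blake2b runs in sequential (non-tree) mode by default


def secret_derive(k: bytes, x: bytes) -> bytes:
    """SecretDerive(k, x) = H32(k, x)."""
    return H(32, k, x)


print(secret_derive(b"example key", b"example input").hex())
```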

Two elliptic curves are used in this specification:

- Curve25519 - a Montgomery curve. Points on this curve include a cyclic subgroup 𝔾1.
- Ed25519 - a twisted Edwards curve. Points on this curve include a cyclic subgroup 𝔾2.

Both curves are birationally equivalent, so the subgroups 𝔾1 and 𝔾2 have the same prime order $\ell = 2^{252} + 27742317777372353535851937790883648493$. The total number of points on each curve is 8ℓ.

Curve25519 is used exclusively for the Diffie-Hellman key exchange [11].

Only a single generator point B is used:

| Point | Derivation | Serialized (hex) |
|---|---|---|
| B | generator of 𝔾1 | 0900000000000000000000000000000000000000000000000000000000000000 |

Private keys for Curve25519 are 32-byte integers denoted by a lowercase letter d. They are generated using the following KeyDerive1(k, x) function:

1. d = H32(k, x)
2. d[31] &= 0x7f (clear the most significant bit)
3. d[0] &= 0xf8 (clear the least significant 3 bits)
4. return d

All Curve25519 private keys are therefore multiples of the cofactor 8, which ensures that all public keys are in the prime-order subgroup. The multiplicative inverse modulo ℓ is calculated as $d^{-1} = 8 \cdot (8d)^{-1}$ to preserve the aforementioned property: the result is again a multiple of 8, and its product with d is $(8d) \cdot (8d)^{-1} \equiv 1 \pmod{\ell}$.
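A minimal sketch of KeyDerive1 and of the cofactor-preserving inverse, assuming (as the byte indices in the steps imply) that d is read as a little-endian integer. H is the Hb helper restated so the block runs on its own; all names are illustrative.

```python
import hashlib
from typing import Optional

ELL = 2**252 + 27742317777372353535851937790883648493  # prime order of G1 and G2


def H(b: int, k: Optional[bytes], x: bytes) -> bytes:
    return hashlib.blake2b(x, digest_size=b, key=k or b"", person=b"Monero").digest()


def key_derive1(k: bytes, x: bytes) -> int:
    """KeyDerive1(k, x): a Curve25519 private key d that is a multiple of 8."""
    d = bytearray(H(32, k, x))
    d[31] &= 0x7F  # clear the most significant bit
    d[0] &= 0xF8   # clear the least significant 3 bits
    return int.from_bytes(d, "little")


def inverse_keep_cofactor(d: int) -> int:
    """d^-1 = 8 * (8*d)^-1: congruent to d^-1 mod ELL and still a multiple of 8."""
    return 8 * pow(8 * d, -1, ELL)  # modular inverse via pow needs Python 3.8+


d = key_derive1(b"example key", b"example input")
d_inv = inverse_keep_cofactor(d)
print(d % 8, d_inv % 8, d * d_inv % ELL)  # prints: 0 0 1
```

The inverse is deliberately not reduced modulo ℓ after the multiplication by 8; it stays below 8ℓ, which is still less than 2^255.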

Public keys (elements of 𝔾1) are denoted by the capital letter D and are serialized as the x-coordinate of the corresponding Curve25519 point. Scalar multiplication is denoted by a space, e.g. D = d B.

The Edwards curve is used for signatures and more complex cryptographic protocols [12]. The following three generators are used:

| Point | Derivation | Serialized (hex) |
|---|---|---|
| G | generator of 𝔾2 | 5866666666666666666666666666666666666666666666666666666666666666 |
| U | Hp("seraphis U") | 126582dfc357b10ecb0ce0f12c26359f53c64d4900b7696c2c4b3f7dcab7f730 |
| X | Hp("seraphis X") | 4017a126181c34b0774d590523a08346be4f42348eddd50eb7a441b571b2b613 |

Here Hp refers to an unspecified hash-to-point function.

Private keys for Ed25519 are 32-byte integers denoted by a lowercase letter k. They are generated using the following function:

KeyDerive2(k, x) = H64(k, x) mod ℓ
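KeyDerive2 is a wide reduction: 64 bytes of hash output reduced modulo ℓ, which keeps the bias of the resulting scalar negligible. The sketch assumes the 64-byte digest is read as a little-endian integer, matching the BytesToInt256 convention; that byte order is an assumption, since it is not stated here.

```python
import hashlib
from typing import Optional

ELL = 2**252 + 27742317777372353535851937790883648493


def H(b: int, k: Optional[bytes], x: bytes) -> bytes:
    return hashlib.blake2b(x, digest_size=b, key=k or b"", person=b"Monero").digest()


def key_derive2(k: bytes, x: bytes) -> int:
    """KeyDerive2(k, x) = H64(k, x) mod ELL (digest assumed little-endian)."""
    return int.from_bytes(H(64, k, x), "little") % ELL


print(hex(key_derive2(b"example key", b"example input")))
```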

Public keys (elements of 𝔾2) are denoted by the capital letter K and are serialized as 256-bit integers, with the lower 255 bits being the y-coordinate of the corresponding Ed25519 point and the most significant bit being the parity of the x-coordinate. Scalar multiplication is denoted by a space, e.g. K = k G.
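The encoding rule can be checked against the generator table: G has y = 4/5 mod p (p = 2^255 - 19) and an even x-coordinate, so packing y with a zero parity bit yields 5866...66. This sketch takes the parity of x as a flag instead of computing x; the function name is illustrative.

```python
P = 2**255 - 19  # field prime of Curve25519/Ed25519


def serialize_ed25519(x_is_odd: bool, y: int) -> bytes:
    """Lower 255 bits: y-coordinate; most significant bit: parity of x."""
    if not 0 <= y < P:
        raise ValueError("y must be reduced modulo P")
    return (y | (int(x_is_odd) << 255)).to_bytes(32, "little")


G_Y = 4 * pow(5, -1, P) % P  # y-coordinate of the Ed25519 base point G
print(serialize_ed25519(False, G_Y).hex())  # prints the G entry: 58 then 66 repeated
```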

The function BlockEnc(s, x) refers to the application of the Twofish [13] permutation using the secret key s on the 16-byte input x. The function BlockDec(s, x) refers to the application of the inverse permutation using the key s.

"Base32" in this specification refers to a binary-to-text encoding using the alphabet xmrbase32cdfghijknpqtuwy01456789. This alphabet was selected for the following reasons:

- The order of the characters has a unique prefix that distinguishes the encoding from other variants of "base32".
- The alphabet contains all digits 0-9, which allows numeric values to be encoded in a human-readable form.
- The alphabet excludes the letters o, l, v and z for the same reasons as the z-base-32 encoding [14].
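A sketch of encoding with this alphabet. The bit order is an assumption, since this section does not fix it: the payload is treated as one big integer and emitted most significant 5-bit group first. Decoding lower-cases its input so that an upper-case form is also accepted. Function names are illustrative.

```python
CHARSET = "xmrbase32cdfghijknpqtuwy01456789"


def base32_encode(value: int, nbits: int) -> str:
    """Encode an nbits-wide unsigned integer, most significant 5-bit group first."""
    if value < 0 or value >> nbits:
        raise ValueError("value does not fit in nbits")
    nchars = (nbits + 4) // 5  # e.g. 910 bits -> 182 characters
    return "".join(
        CHARSET[(value >> (5 * (nchars - 1 - i))) & 31] for i in range(nchars)
    )


def base32_decode(text: str) -> int:
    """Inverse of base32_encode; accepts upper or lower case."""
    value = 0
    for ch in text.lower():
        idx = CHARSET.find(ch)
        if idx < 0:
            raise ValueError(f"invalid base32 character: {ch!r}")
        value = (value << 5) | idx
    return value


print(base32_encode(2024, 15))
```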

Each wallet consists of two main private keys and a timestamp:

| Field | Type | Description |
|---|---|---|
| km | private key | wallet master key |
| kvb | private key | view-balance key |
| birthday | timestamp | date when the wallet was created |

The master key km is required to spend money in the wallet and the view-balance key kvb provides full view-only access.

The birthday timestamp is important when restoring a wallet and determines the blockchain height where scanning for owned outputs should begin.

Standard Jamtis wallets are generated as a 16-word Polyseed mnemonic [7], which contains a secret seed value used to derive the wallet master key and also encodes the date when the wallet was created. The key kvb is derived from the master key.

| Field | Derivation |
|---|---|
| km | BytesToInt256(polyseed_key) mod ℓ |
| kvb | kvb = KeyDerive1(km, "jamtis_view_balance_key") |
| birthday | from Polyseed |

Multisignature wallets are generated in a setup ceremony, where all the signers collectively generate the wallet master key km and the view-balance key kvb.

| Field | Derivation |
|---|---|
| km | setup ceremony |
| kvb | setup ceremony |
| birthday | setup ceremony |

Legacy pre-Seraphis wallets define two private keys:

- private spend key ks
- private view key kv

Legacy standard wallets can be migrated to the new scheme based on the following table:

| Field | Derivation |
|---|---|
| km | km = ks |
| kvb | kvb = KeyDerive1(km, "jamtis_view_balance_key") |
| birthday | entered manually |

Legacy wallets cannot be migrated to Polyseed and will keep using the legacy 25-word seed.

Legacy multisignature wallets can be migrated to the new scheme based on the following table:

| Field | Derivation |
|---|---|
| km | km = ks |
| kvb | kvb = kv |
| birthday | entered manually |

There are additional keys derived from kvb:

| Key | Name | Derivation | Used to |
|---|---|---|---|
| dfr | find-received key | dfr = KeyDerive1(kvb, "jamtis_find_received_key") | scan for received outputs |
| dua | unlock-amounts key | dua = KeyDerive1(kvb, "jamtis_unlock_amounts_key") | decrypt output amounts |
| sga | generate-address secret | sga = SecretDerive(kvb, "jamtis_generate_address_secret") | generate addresses |
| sct | cipher-tag secret | sct = SecretDerive(sga, "jamtis_cipher_tag_secret") | encrypt address tags |
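The derivations in the standard-wallet table and the table above can be chained as follows. This is a sketch under stated assumptions: scalar keys such as km and kvb are passed to Blake2b as their 32-byte Int256ToBytes encoding, label strings are ASCII bytes, and polyseed_key is a hypothetical 32-byte stand-in for the Polyseed secret.

```python
import hashlib
from typing import Optional

ELL = 2**252 + 27742317777372353535851937790883648493


def H(b: int, k: Optional[bytes], x: bytes) -> bytes:
    return hashlib.blake2b(x, digest_size=b, key=k or b"", person=b"Monero").digest()


def key_derive1(k: bytes, x: bytes) -> int:
    d = bytearray(H(32, k, x))
    d[31] &= 0x7F  # clear the most significant bit
    d[0] &= 0xF8   # clear the least significant 3 bits
    return int.from_bytes(d, "little")


def secret_derive(k: bytes, x: bytes) -> bytes:
    return H(32, k, x)


def to32(n: int) -> bytes:
    return n.to_bytes(32, "little")  # Int256ToBytes


def derive_wallet_keys(polyseed_key: bytes) -> dict:
    k_m = int.from_bytes(polyseed_key, "little") % ELL  # BytesToInt256(...) mod ell
    k_vb = key_derive1(to32(k_m), b"jamtis_view_balance_key")
    s_ga = secret_derive(to32(k_vb), b"jamtis_generate_address_secret")
    return {
        "k_m": k_m,
        "k_vb": k_vb,
        "d_fr": key_derive1(to32(k_vb), b"jamtis_find_received_key"),
        "d_ua": key_derive1(to32(k_vb), b"jamtis_unlock_amounts_key"),
        "s_ga": s_ga,
        "s_ct": secret_derive(s_ga, b"jamtis_cipher_tag_secret"),
    }


keys = derive_wallet_keys(bytes(range(32)))  # hypothetical seed material
print({name: (hex(v) if isinstance(v, int) else v.hex()) for name, v in keys.items()})
```

Each lower wallet tier would then receive only its subset of these values, for example s_ga for AddrGen and d_fr for FindReceived.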

The key dfr provides the ability to calculate the sender-receiver shared secret when scanning for received outputs. The key dua can be used to create a secondary shared secret and is used to decrypt output amounts.

The key sga is used to generate public addresses. It has an additional child key sct, which is used to encrypt the address tag.

The following figure shows the overall hierarchy of wallet keys. Note that the relationship between km and kvb only applies to standard (non-multisignature) wallets.

| Tier | Knowledge | Off-chain capabilities | On-chain capabilities |
|---|---|---|---|
| AddrGen | sga | generate public addresses | none |
| FindReceived | dfr | recognize all public wallet addresses | eliminate 99.6% of non-owned outputs (up to § 5.3.5), link output to an address (except for change and self-spends) |
| ViewReceived | dfr, dua, sga | all | view all received outputs except for change and self-spends (up to § 5.3.14) |
| ViewAll | kvb | all | view all |
| Master | km | all | all |

The AddrGen tier can generate public addresses for the wallet. It doesn't provide any blockchain access.

Thanks to view tags, the FindReceived tier can eliminate 99.6% of outputs that don't belong to the wallet. If provided with a list of wallet addresses, it can also link outputs to those addresses (but it cannot generate addresses on its own). This tier should provide a noticeable UX improvement with a limited impact on privacy. Possible use cases are:

- An always-online wallet component that filters out outputs in the blockchain. A higher-tier wallet can thus be synchronized 256x faster when it comes online.

- Third party scanning services. The service can preprocess the blockchain and provide a list of potential outputs with pre-calculated spend keys (up to § 5.2.4). This reduces the amount of data that a light wallet has to download by a factor of at least 256.

The ViewReceived tier combines the AddrGen and FindReceived tiers with the unlock-amounts key dua. This gives the wallet the ability to see all incoming payments, but not any outgoing payments or change outputs. It can be used for payment processing or auditing purposes.

The ViewAll tier is a full view-only wallet that can see all incoming and outgoing payments (and thus can calculate the correct wallet balance).

The Master tier has full control of the wallet.

There are 3 global wallet public keys. These keys are not usually published, but are needed by lower wallet tiers.

| Key | Name | Value |
|---|---|---|
| Ks | wallet spend key | Ks = kvb X + km U |
| Dua | unlock-amounts key | Dua = dua B |
| Dfr | find-received key | Dfr = dfr Dua |

Jamtis wallets can generate up to $2^{128}$ different addresses. Each address is constructed from a 128-bit index j. The size of the index space allows stateless generation of new addresses without collisions, for example by constructing j as a UUID [15].

Each Jamtis address encodes the tuple (K1j, D2j, D3j, tj). The first three values are public keys, while tj is the "address tag" that contains the encrypted value of j.

The three public keys are constructed as:

$$K_1^j = K_s + k_u^j U + k_x^j X + k_g^j G$$

$$D_2^j = d_a^j D_{fr}$$

$$D_3^j = d_a^j D_{ua}$$

The private keys kuj, kxj, kgj and daj are derived as follows:

| Keys | Name | Derivation |
|---|---|---|
| kuj | spend key extensions | kuj = KeyDerive2(sga, "jamtis_spendkey_extension_u" \|\| j) |
| kxj | spend key extensions | kxj = KeyDerive2(sga, "jamtis_spendkey_extension_x" \|\| j) |
| kgj | spend key extensions | kgj = KeyDerive2(sga, "jamtis_spendkey_extension_g" \|\| j) |
| daj | address keys | daj = KeyDerive1(sga, "jamtis_address_privkey" \|\| j) |
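To see how D2j and D3j relate, the sketch below implements Curve25519 scalar multiplication with the Montgomery ladder from RFC 7748 (omitting RFC 7748's scalar clamping, because KeyDerive1 already shapes the scalars, and making no attempt at constant time) and derives both keys for a fresh UUID-based index j. Both equal daj·dfr·dua·B, so D2j = dfr·D3j; presumably this is what lets the FindReceived tier, which knows only dfr, recognize the wallet's addresses. The wallet secrets are hypothetical fixed bytes, and j is assumed to be appended to the label as its 16 raw bytes.

```python
import hashlib
import uuid
from typing import Optional

P = 2**255 - 19
A24 = 121665  # (486662 - 2) / 4, ladder constant for Curve25519
B_U = 9       # u-coordinate of the generator B


def H(b: int, k: Optional[bytes], x: bytes) -> bytes:
    return hashlib.blake2b(x, digest_size=b, key=k or b"", person=b"Monero").digest()


def key_derive1(k: bytes, x: bytes) -> int:
    d = bytearray(H(32, k, x))
    d[31] &= 0x7F
    d[0] &= 0xF8
    return int.from_bytes(d, "little")


def x25519_mul(k: int, u: int) -> int:
    """u-coordinate of k times the point with u-coordinate u (RFC 7748 ladder, no clamping)."""
    x1, x2, z2, x3, z3, swap = u % P, 1, 0, u % P, 1, 0
    for t in reversed(range(255)):
        bit = (k >> t) & 1
        swap ^= bit
        if swap:  # conditional swap (not constant-time in Python)
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        da, cb = (x3 - z3) * a % P, (x3 + z3) * b % P
        x3, z3 = (da + cb) ** 2 % P, x1 * (da - cb) ** 2 % P
        x2, z2 = aa * bb % P, e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return x2 * pow(z2, P - 2, P) % P


# Hypothetical wallet secrets (in a real wallet these are derived from kvb).
k_vb_bytes = bytes(range(32))
d_fr = key_derive1(k_vb_bytes, b"jamtis_find_received_key")
d_ua = key_derive1(k_vb_bytes, b"jamtis_unlock_amounts_key")
s_ga = H(32, k_vb_bytes, b"jamtis_generate_address_secret")  # SecretDerive

# Global public keys: D_ua = d_ua B and D_fr = d_fr D_ua.
D_ua = x25519_mul(d_ua, B_U)
D_fr = x25519_mul(d_fr, D_ua)

# A fresh 128-bit index j taken from a random UUID (16 bytes).
j = uuid.uuid4().bytes
d_a = key_derive1(s_ga, b"jamtis_address_privkey" + j)
D2 = x25519_mul(d_a, D_fr)  # D_2^j = d_a^j D_fr
D3 = x25519_mul(d_a, D_ua)  # D_3^j = d_a^j D_ua

print(D2 == x25519_mul(d_fr, D3))  # prints: True
```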

Each address additionally includes an 18-byte tag tj = (j', hj'), which consists of the encrypted value of j:

j' = BlockEnc(sct, j)

and a 2-byte "tag hint", which can be used to quickly recognize owned addresses:

hj' = H2(sct, "jamtis_address_tag_hint" || j')
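A sketch of assembling tj and of the cheap hint check an owner could run before decrypting j'. Twofish is not in the Python standard library, so the 16-byte j' below is a hypothetical placeholder for BlockEnc(sct, j). A tag made with a different secret passes the 2-byte hint check with a probability of roughly 1 in 65536.

```python
import hashlib
from typing import Optional


def H(b: int, k: Optional[bytes], x: bytes) -> bytes:
    return hashlib.blake2b(x, digest_size=b, key=k or b"", person=b"Monero").digest()


def make_address_tag(s_ct: bytes, j_enc: bytes) -> bytes:
    """t_j = j' || h_j': the 16-byte encrypted index followed by its 2-byte hint."""
    if len(j_enc) != 16:
        raise ValueError("j' must be exactly 16 bytes")
    return j_enc + H(2, s_ct, b"jamtis_address_tag_hint" + j_enc)


def tag_hint_matches(s_ct: bytes, tag: bytes) -> bool:
    """Recompute the hint from j'; a mismatch means the tag was not made with s_ct."""
    return len(tag) == 18 and H(2, s_ct, b"jamtis_address_tag_hint" + tag[:16]) == tag[16:]


s_ct = bytes(range(32))  # hypothetical cipher-tag secret
j_enc = bytes.fromhex("00112233445566778899aabbccddeeff")  # stand-in for BlockEnc(s_ct, j)
tag = make_address_tag(s_ct, j_enc)
tampered = tag[:16] + bytes([tag[16] ^ 1]) + tag[17:]
print(len(tag), tag_hint_matches(s_ct, tag), tag_hint_matches(s_ct, tampered))
# prints: 18 True False
```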

TODO

TODO

TODO

Jamtis has a small impact on transaction size.

The size of 2-output transactions is increased by 28 bytes. The encrypted payment ID is removed, but the transaction needs two encrypted address tags $\tilde{t}$ (one for the recipient and one for the change). Both outputs can use the same value of $D_e$.

Since there are no "main" addresses anymore, the TX_EXTRA_TAG_PUBKEY field can be removed from transactions with 3 or more outputs.

Instead, all transactions with 3 or more outputs will require one 50-byte tuple $(D_e, \tilde{t})$ per output.

An address has the following overall structure:

| Field | Size (bits) | Description |
|---|---|---|
| Header | 30* | human-readable address header (§ 6.2) |
| K1 | 256 | address key 1 |
| D2 | 255 | address key 2 |
| D3 | 255 | address key 3 |
| t | 144 | address tag |
| Checksum | 40* | (§ 6.3) |

\* The header and the checksum are already in base32 format.

The address starts with a human-readable header, which has the following format consisting of 6 alphanumeric characters:

"xmra" <version char> <network type char>

Unlike the rest of the address, the header is never encoded and is the same for both the binary and textual representations. The string is not null terminated.

The software decoding an address shall abort if the first 4 bytes are not 0x78 0x6d 0x72 0x61 ("xmra").

The "xmra" prefix serves as a disambiguation from legacy addresses that start with "4" or "8". Additionally, base58 strings that start with the character x are invalid due to overflow [16], so legacy Monero software can never accidentally decode a Jamtis address.

The version character is "1". The software decoding an address shall abort if a different character is encountered.

| network char | network type |
|---|---|
| "t" | testnet |
| "s" | stagenet |
| "m" | mainnet |

The software decoding an address shall abort if an invalid network character is encountered.
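The three abort rules for the header can be sketched as a single check on the canonical lower-case string; the function name and the returned network labels are illustrative.

```python
NETWORKS = {"t": "testnet", "s": "stagenet", "m": "mainnet"}


def parse_header(address: str) -> str:
    """Apply the header rules and return the network type."""
    if len(address) < 6 or not address.startswith("xmra"):
        raise ValueError("missing 'xmra' prefix")
    version, network = address[4], address[5]
    if version != "1":
        raise ValueError(f"unsupported version character {version!r}")
    if network not in NETWORKS:
        raise ValueError(f"invalid network character {network!r}")
    return NETWORKS[network]


print(parse_header("xmra1t"))  # prints: testnet
```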

The purpose of the checksum is to detect accidental corruption of the address. The checksum consists of 8 characters and is calculated with a cyclic code over GF(32) using the polynomial:

$$x^8 + 3x^7 + 11x^6 + 18x^5 + 5x^4 + 25x^3 + 21x^2 + 12x + 1$$

The checksum can detect all errors affecting 5 or fewer characters. Arbitrary corruption of the address has a chance of less than 1 in $10^{12}$ of not being detected. Reference code for calculating the checksum is in Appendix A.

An address can be encoded into a string as follows:

address_string = header + base32(data) + checksum

where header is the 6-character human-readable header string (already in base32), data refers to the address tuple (K1, D2, D3, t) encoded in 910 bits, and checksum is the 8-character checksum (already in base32). The total length of the encoded address is 196 characters (= 6 + 182 + 8).

While the canonical form of an address is lower case, when encoding an address into a QR code, the address should be converted to upper case to take advantage of the more efficient alphanumeric encoding mode.
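A sketch of handling both textual forms: the address is lower-cased to its canonical form, checked for length and alphabet, and split into its 6 + 182 + 8 character parts. Header validation and checksum verification (Appendix A) would follow; the helper names are hypothetical.

```python
from typing import Tuple

CHARSET = "xmrbase32cdfghijknpqtuwy01456789"
HEADER_LEN, DATA_LEN, CHECKSUM_LEN = 6, 182, 8  # 196 characters in total


def to_qr_form(address: str) -> str:
    """Upper-case form, which fits the QR alphanumeric mode (0-9, A-Z)."""
    return address.upper()


def split_address(address: str) -> Tuple[str, str, str]:
    """Return (header, data, checksum) of an address given in either case."""
    s = address.lower()  # the canonical form is lower case
    if len(s) != HEADER_LEN + DATA_LEN + CHECKSUM_LEN:
        raise ValueError(f"expected 196 characters, got {len(s)}")
    if any(ch not in CHARSET for ch in s):
        raise ValueError("address contains a character outside the alphabet")
    return s[:HEADER_LEN], s[HEADER_LEN:-CHECKSUM_LEN], s[-CHECKSUM_LEN:]


qr = to_qr_form("xmra1m" + "x" * 182 + "kckh51ik")  # dummy data, not a valid address
header, data, checksum = split_address(qr)
print(header, len(data), checksum)  # prints: xmra1m 182 kckh51ik
```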

TODO

TODO

1. https://github.com/UkoeHB/Seraphis
2. https://github.com/monero-project/research-lab/blob/master/whitepaper/whitepaper.pdf
3. monero-project/meta#299 (comment)
4. https://www.getmonero.org/resources/user-guides/view_only.html
5. https://web.getmonero.org/2019/10/18/subaddress-janus.html
6. monero-project/monero#8138
7. https://github.com/tevador/polyseed
8. monero-project/monero#7889
9. monero-project/research-lab#73
10. https://eprint.iacr.org/2013/322.pdf
11. https://cr.yp.to/ecdh/curve25519-20060209.pdf
12. https://ed25519.cr.yp.to/ed25519-20110926.pdf
13. https://www.schneier.com/wp-content/uploads/2016/02/paper-twofish-paper.pdf
14. http://philzimmermann.com/docs/human-oriented-base-32-encoding.txt
15. https://en.wikipedia.org/wiki/Universally_unique_identifier
16. https://github.com/monero-project/monero/blob/319b831e65437f1c8e5ff4b4cb9be03f091f6fc6/src/common/base58.cpp#L157

```python
# Jamtis address checksum algorithm
# cyclic code based on the generator 3BI5PLC1
# can detect 5 errors up to the length of 994 characters

GEN = [0x1ae45cd581, 0x359aad8f02, 0x61754f9b24, 0xc2ba1bb368, 0xcd2623e3f0]
M = 0xffffffffff  # 40-bit constant: a valid string has polymod == M

def jamtis_polymod(data):
    # data is a list of 5-bit symbols (0..31); c holds a 40-bit remainder
    c = 1
    for v in data:
        b = (c >> 35)  # top 5 bits that are shifted out
        c = ((c & 0x07ffffffff) << 5) ^ v
        for i in range(5):
            # reduce by the generator for each bit that was shifted out
            c ^= GEN[i] if ((b >> i) & 1) else 0
    return c

def jamtis_verify_checksum(data):
    return jamtis_polymod(data) == M

def jamtis_create_checksum(data):
    # append 8 zero symbols, then split the 40-bit result into 8 symbols (MSB first)
    polymod = jamtis_polymod(data + [0, 0, 0, 0, 0, 0, 0, 0]) ^ M
    return [(polymod >> 5 * (7 - i)) & 31 for i in range(8)]

# test/example
CHARSET = "xmrbase32cdfghijknpqtuwy01456789"

addr_test = (
    "xmra1mj0b1977bw3ympyh2yxd7hjymrw8crc9kin0dkm8d3"
    "wdu8jdhf3fkdpmgxfkbywbb9mdwkhkya4jtfn0d5h7s49bf"
    "yji1936w19tyf3906ypj09n64runqjrxwp6k2s3phxwm6wr"
    "b5c0b6c1ntrg2muge0cwdgnnr7u7bgknya9arksrj0re7wh")

addr_data = [CHARSET.find(x) for x in addr_test]
addr_enc = addr_data + jamtis_create_checksum(addr_data)
addr = "".join([CHARSET[x] for x in addr_enc])
print(addr)
print("len =", len(addr))
print("valid =", jamtis_verify_checksum(addr_enc))
```

Here are some additions to my proposal:

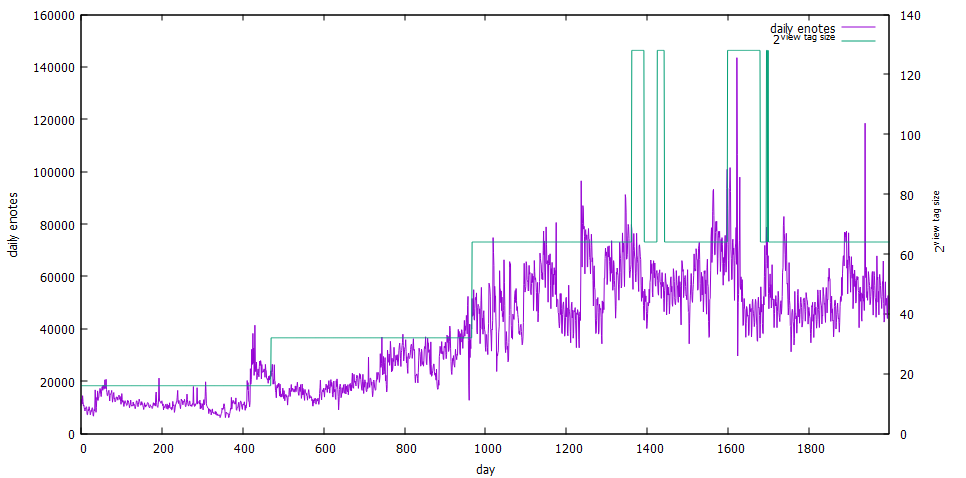

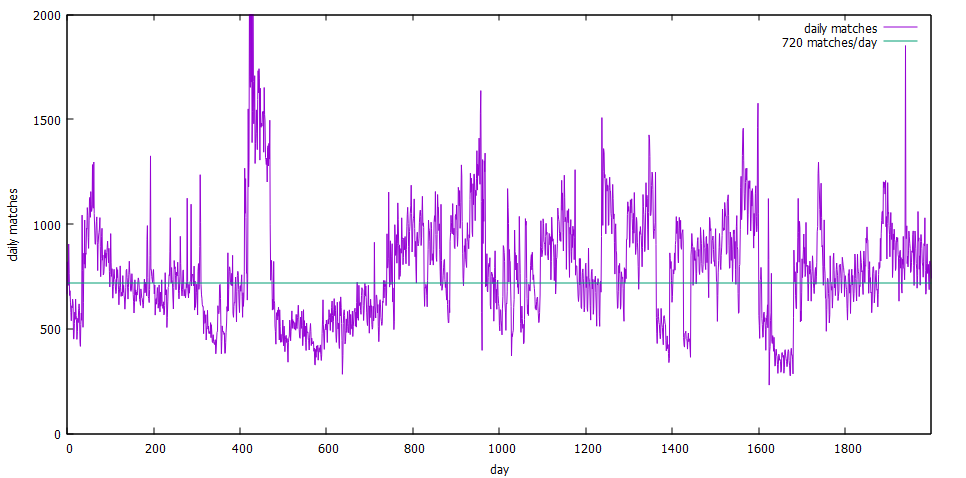

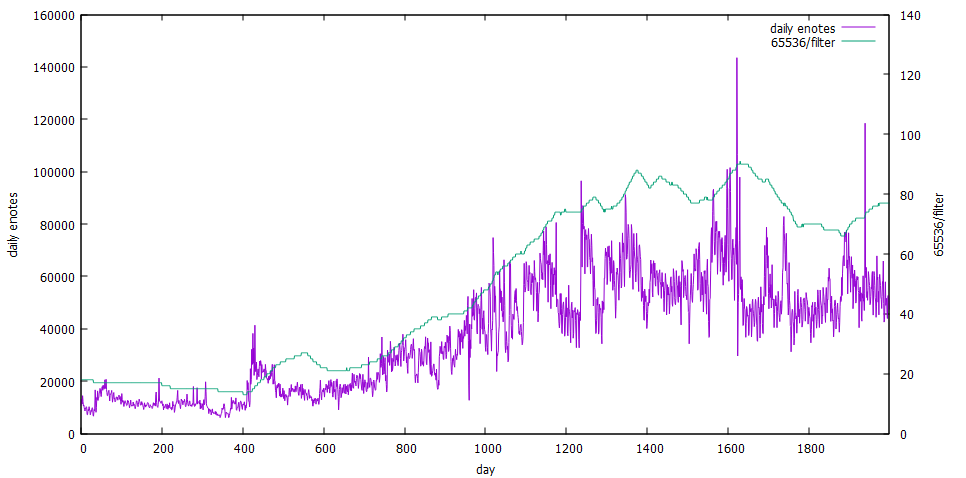

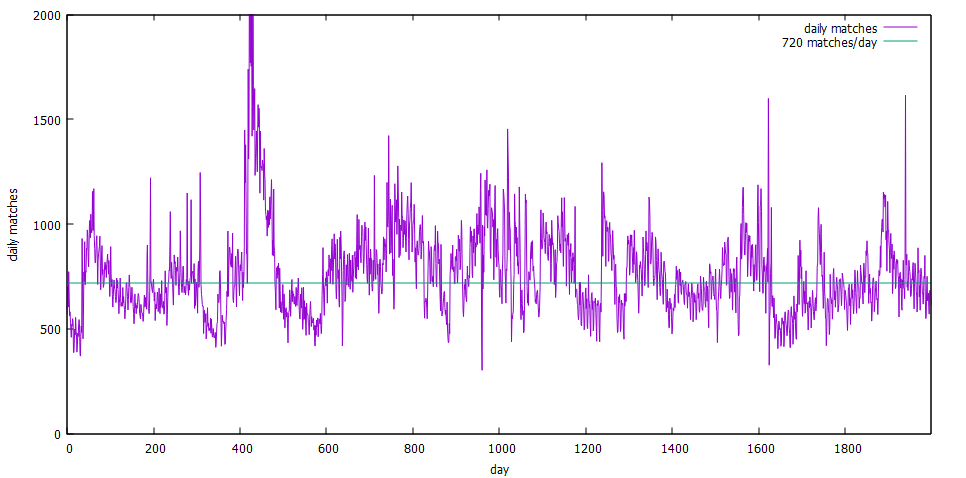

View tag filter target

The filter target should be 480 enotes/day. Because the view tag filter rate must be a power of 2, this will actually result in a range from 480 to 960 enotes per day depending on the tx volume. If we "average the averages" over all possible values of tx volume, this will give a mean of 720 enote matches per day, or roughly 1 match per block, which is what was suggested by @jeffro256. I think this is close to the upper limit of what is acceptable for light wallet clients (~200 KB/day) and should provide a good number of false positives even if there was a short term drop in tx volume.

The formula to calculate the view tag size in bits is:

where num_outputs_100k is the total number of outputs in the last 100 000 blocks. The trunc(log2(x)) function can be easily calculated using only integer operations (it's basically the position of the most significant bit).

As an example, the value of num_outputs_100k is currently about 7.9 million, which results in a view tag size of 6 bits when plugged into the formula. With around 56000 daily outputs, there will be about 880 matches per day. If the long-term daily volume increases to about 62000 outputs, the view tag size will be increased to 7 bits and the number of matches will drop to 480 per day.

View tag size encoding

The view tag size must be encoded explicitly to avoid UX issues with missed transactions at times when the view tag size changes. This can be done with a 1-byte field per transaction (all outputs will use the same tag size).

I'm proposing a range of valid values for the tag size between 1 and 16 bits (instead of the previously proposed 5-20 bits).

A 1-bit view tag requires num_outputs_100k > 133333. Since there are always at least 100k coinbase outputs, the 1-bit view tag would be "too large" only if there were fewer than 120 transactions per day, which hasn't happened on mainnet except for a few weeks shortly after launch in 2014.

A 17-bit view tag that would overflow the supported range would require num_outputs_100k > 8738133333, an increase of more than 1000x over the current tx volume. If this somehow happened, the number of false positives would exceed 960 per day, which would only have performance implications for light wallets, but would not cause any privacy problems.

So the proposed range of 1-16 bits is sufficient.
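A form of the tag-size formula that reproduces every figure quoted in this comment (6 bits at 7.9 million outputs, 7 bits at about 62000 daily outputs, and the 133333 and 8738133333 thresholds) can be sketched as follows. It assumes 720 blocks per day and clamps the result to the proposed 1-16 bit range; it is a reconstruction from those numbers, not necessarily the exact expression being proposed.

```python
def view_tag_size(num_outputs_100k: int) -> int:
    """View tag size in bits, clamped to the proposed range of 1..16."""
    # Assumption: 720 blocks per day, so daily outputs = num_outputs_100k * 720 / 100000.
    # Largest size with daily_outputs / 2**size >= 480:
    #   trunc(log2(num_outputs_100k * 720 / (100000 * 480)))
    # = trunc(log2(3 * num_outputs_100k / 200000))
    x = 3 * num_outputs_100k // 200000
    size = x.bit_length() - 1  # trunc(log2(x)) for x >= 1, and -1 for x == 0
    return max(1, min(16, size))


def daily_matches(num_outputs_100k: int) -> float:
    """Expected view tag matches per day for the chosen tag size."""
    return num_outputs_100k * 720 / 100000 / 2 ** view_tag_size(num_outputs_100k)


print(view_tag_size(7_900_000), round(daily_matches(7_900_000)))  # prints: 6 889
```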

Complementary view tag

Regardless of the tag_size, the view tag is always encoded in 2 bytes as a 16-bit integer per enote. The remaining bits are filled with a "complementary" view tag calculated from $s^{sr}_1$, which needs a different private key.

For example, with tag_size = 6, the 16 bits would be CCCCCCCCCCTTTTTT, where T is a view tag bit and C is a complementary view tag bit. This construction ensures that only a few $K_o$ recomputations are needed per 65536 enotes.

A 3rd party scanner would need to be provided with the view-received key $d_{vr}$ in order to calculate the full 16-bit view tag for normal enotes. There are 3 deterrents against such usage: